A Secure & Self-Hosted AI Assistant for CRM


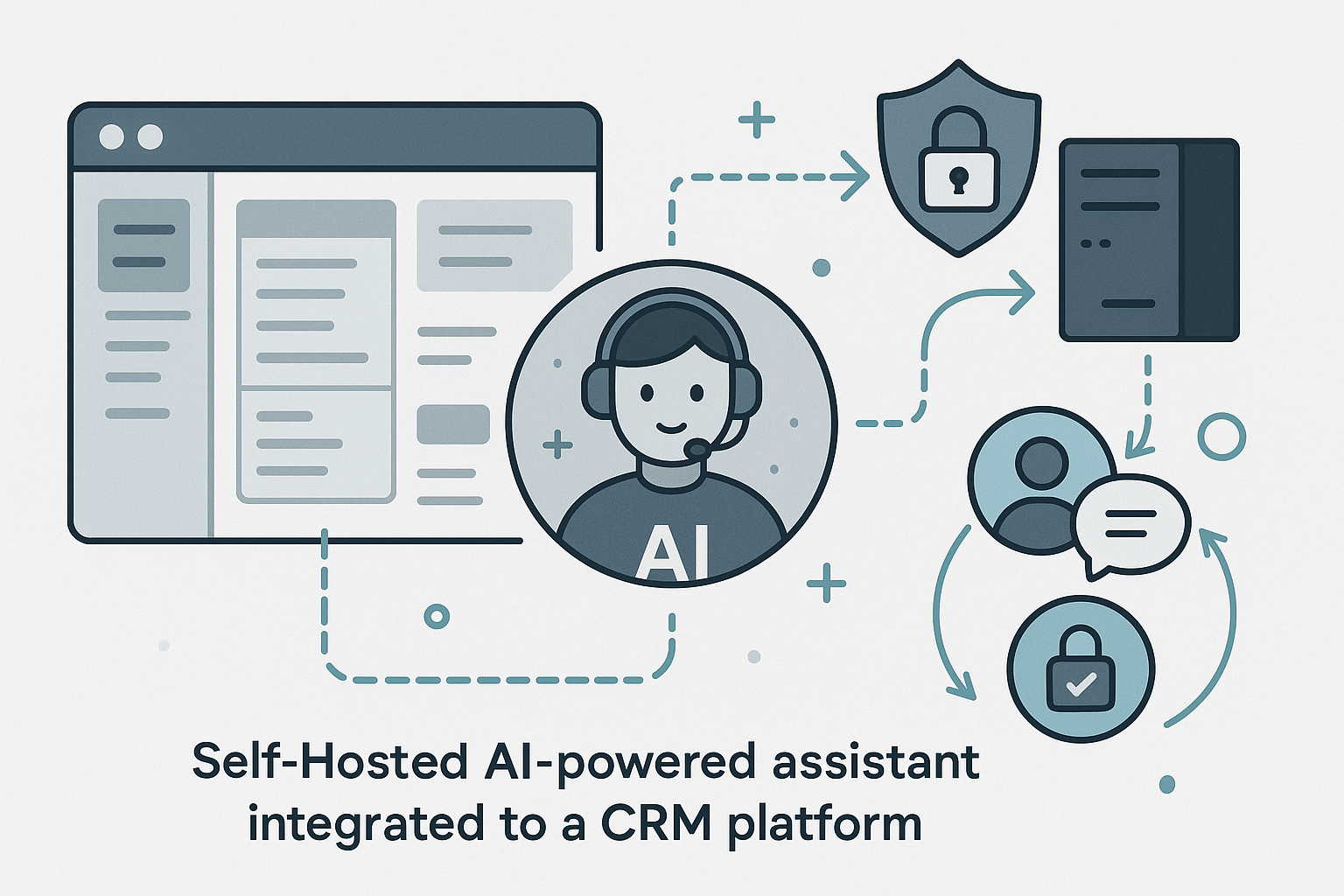

In today’s fast-paced business environment, customer support is paramount. Companies constantly seek innovative ways to provide real-time, accurate answers to their clients. While many turn to cutting-edge AI solutions, what happens when your data is too sensitive for the public cloud? This was the exact dilemma a private organization recently faced, and we helped them navigate it by building a secure, self-hosted Generative AI knowledge base directly within their private infrastructure.

The Challenge: Data Security Meets Real-Time Demands

Imagine a CRM system where customer service executives are inundated with complex queries. They need instant access to a vast internal knowledge base, but every piece of information is confidential. This organization couldn’t risk sending sensitive customer data to external AI services. Their executives were spending valuable time sifting through documents, leading to slower response times and potential inconsistencies.

The core hurdles were clear:

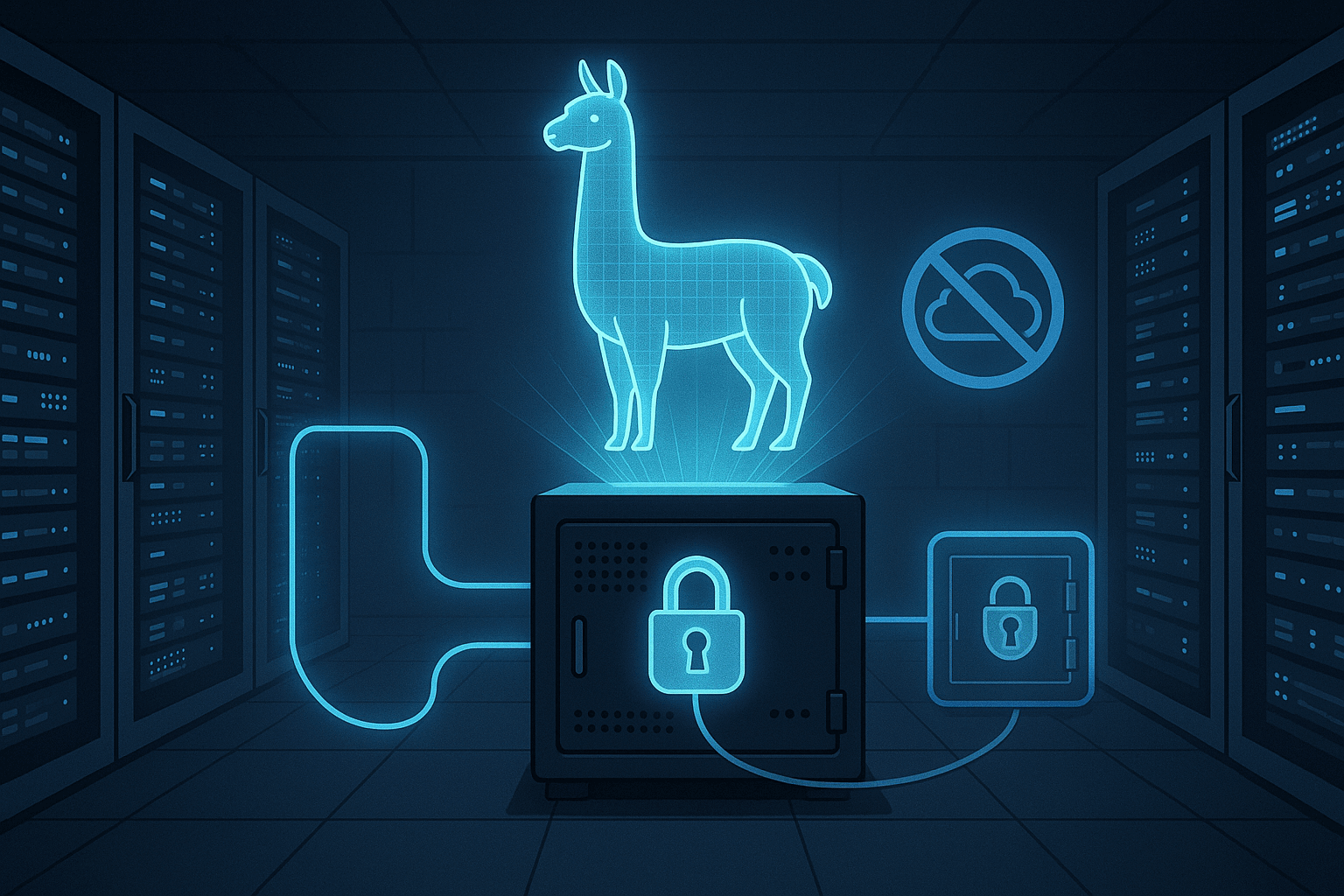

- Top-Tier Data Security: Confidentiality wasn’t just a preference; it was a non-negotiable requirement. Public cloud AI was simply out of the question.

- Instant Information Access: Manual searches were inefficient. Executives needed answers at their fingertips, right when the customer asked.

- Bridging Information Gaps: Critical data was scattered across various internal documents, creating silos that hindered efficient information retrieval.

- Scalability for Growth: The solution had to grow with the organization, handling an ever-increasing volume of data and queries.

Our Solution: A Private-Cloud, Llama-Powered AI Assistant

Our team stepped in to design and implement a bespoke Generative AI knowledge base solution, deployed entirely within the client’s private cloud. This ensured complete data isolation and strict adherence to their security policies.

Here’s how we did it:

- Bringing LLMs In-House: We bypassed external dependencies by deploying a robust, recent version of the Llama Large Language Model (LLM) directly onto the client’s private servers. This gave the client full control over the model and ensured that no data was ever sent to an outside AI provider.

- Training Llama on Their Terms: The key step was fine-tuning the Llama model on the client’s own proprietary internal data. This training taught the model the organization’s unique terminology, business context, and information structure, making it an expert in their domain.
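The article does not describe how the proprietary data was shaped for fine-tuning. As a rough sketch, assuming the internal knowledge was curated into question/answer pairs and written out as prompt/completion records in JSON Lines (a common convention for supervised fine-tuning, not necessarily the one used here), the preparation step might look like this. `PROMPT_TEMPLATE`, `to_sft_records` and `to_jsonl` are hypothetical names.

```python
import json

# Hypothetical prompt template; the project's real template is not documented.
PROMPT_TEMPLATE = (
    "You are an internal CRM support assistant.\n"
    "Question: {question}\n"
    "Answer:"
)


def to_sft_records(qa_pairs):
    """Turn (question, answer) pairs into prompt/completion records.

    Pairs with an empty question or answer are skipped so they never
    reach the training set.
    """
    records = []
    for question, answer in qa_pairs:
        question, answer = question.strip(), answer.strip()
        if not question or not answer:
            continue
        records.append({
            "prompt": PROMPT_TEMPLATE.format(question=question),
            # Leading space keeps the answer separated from "Answer:".
            "completion": " " + answer,
        })
    return records


def to_jsonl(records):
    """Serialise records as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(r, ensure_ascii=False) + "\n" for r in records)
```

The resulting JSONL would then feed a fine-tuning run built on PyTorch / Hugging Face Transformers; whether that run updated all weights or used a parameter-efficient method is not stated in the source.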

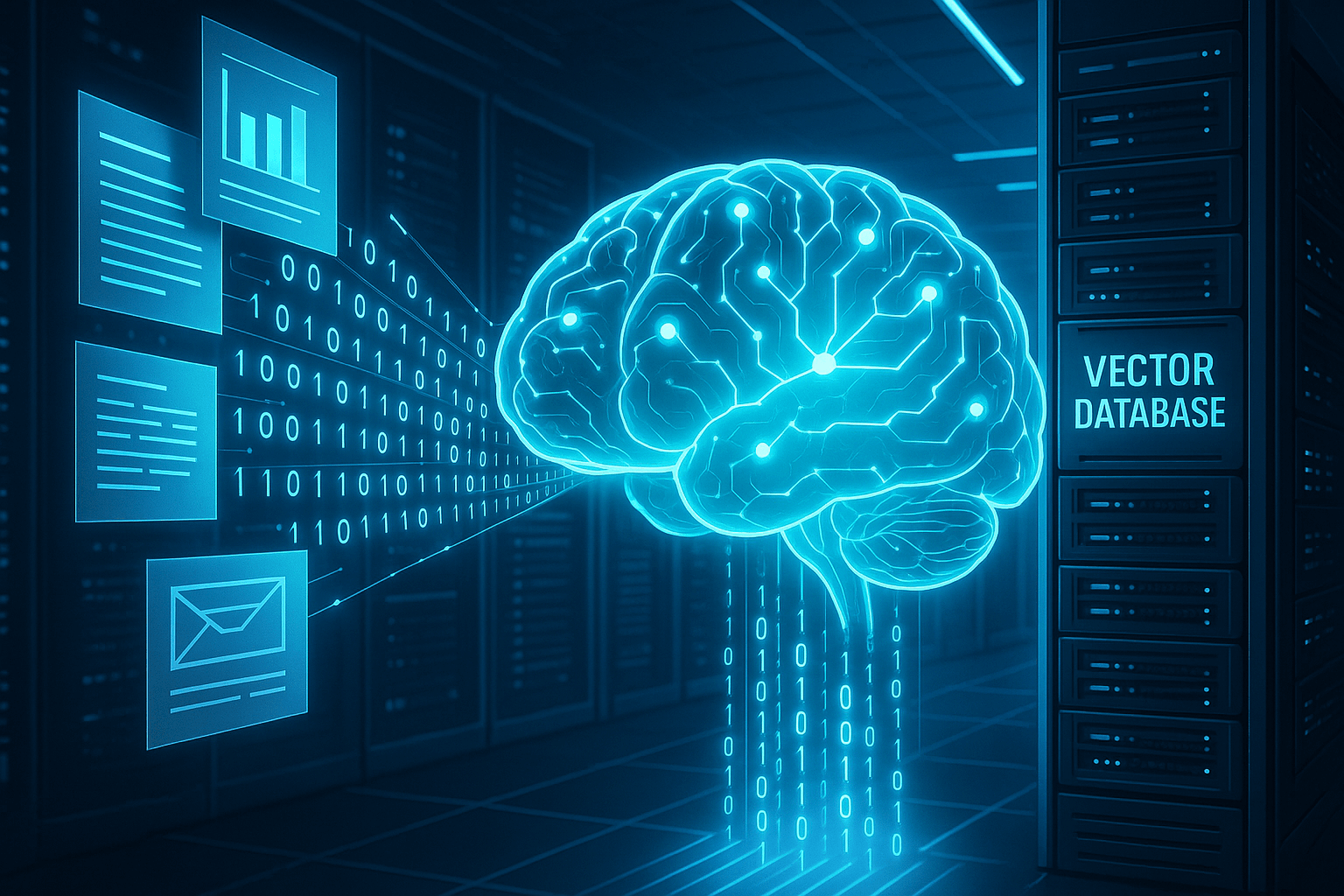

- Building a Dedicated Brain for Data: We established a separate, specialized vector database. This database was meticulously populated with numerical representations (embeddings) of every piece of the client’s internal knowledge base. It became the AI’s “brain” for quickly finding relevant information.
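The indexing step can be sketched without any external dependencies. This is a minimal illustration, not the project’s code: it assumes documents are split into overlapping word windows, and it swaps in a toy hashed bag-of-words `embed` function for Sentence-BERT and a brute-force `VectorIndex` class for Faiss. `chunk_words`, `embed`, `VectorIndex` and `build_index` are hypothetical names. In a real deployment, `embed` would call a Sentence-BERT model and the index would be something like a Faiss `IndexFlatIP` over unit-length vectors.

```python
import hashlib
import math

DIM = 256  # toy size; e.g. all-MiniLM-L6-v2 (Sentence-BERT) outputs 384 dims


def chunk_words(text, size=50, overlap=10):
    """Split text into windows of `size` words that overlap by `overlap` words."""
    if size <= overlap:
        raise ValueError("size must be larger than overlap")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, max(len(words) - overlap, 1), step):
        window = words[start:start + size]
        if window:
            chunks.append(" ".join(window))
    return chunks


def embed(text):
    """Toy stand-in for Sentence-BERT: hashed bag-of-words, L2-normalised.

    md5 is used instead of hash() because str hashing is randomised per process.
    """
    vec = [0.0] * DIM
    for token in text.lower().split():
        token = token.strip(".,;:!?\"'()")
        if token:
            bucket = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % DIM
            vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


class VectorIndex:
    """Brute-force similarity index (stand-in for Faiss)."""

    def __init__(self):
        self.vectors = []
        self.payloads = []

    def add(self, vector, payload):
        self.vectors.append(vector)
        self.payloads.append(payload)
        return len(self.vectors) - 1  # sequential integer id

    def search(self, query_vec, k=3):
        # All vectors are unit length, so dot product == cosine similarity.
        scored = [
            (sum(q * v for q, v in zip(query_vec, vec)), i)
            for i, vec in enumerate(self.vectors)
        ]
        scored.sort(reverse=True)
        return [(self.payloads[i], score) for score, i in scored[:k]]


def build_index(documents):
    """documents: mapping of doc_id -> full text. Embeds every chunk."""
    index = VectorIndex()
    for doc_id, text in documents.items():
        for n, chunk in enumerate(chunk_words(text)):
            index.add(embed(chunk), {"doc_id": doc_id, "chunk": n, "text": chunk})
    return index
```

Because every vector is normalised to unit length, ranking by dot product is the same as ranking by cosine similarity, which is why an inner-product index is a common pairing for Sentence-BERT embeddings.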

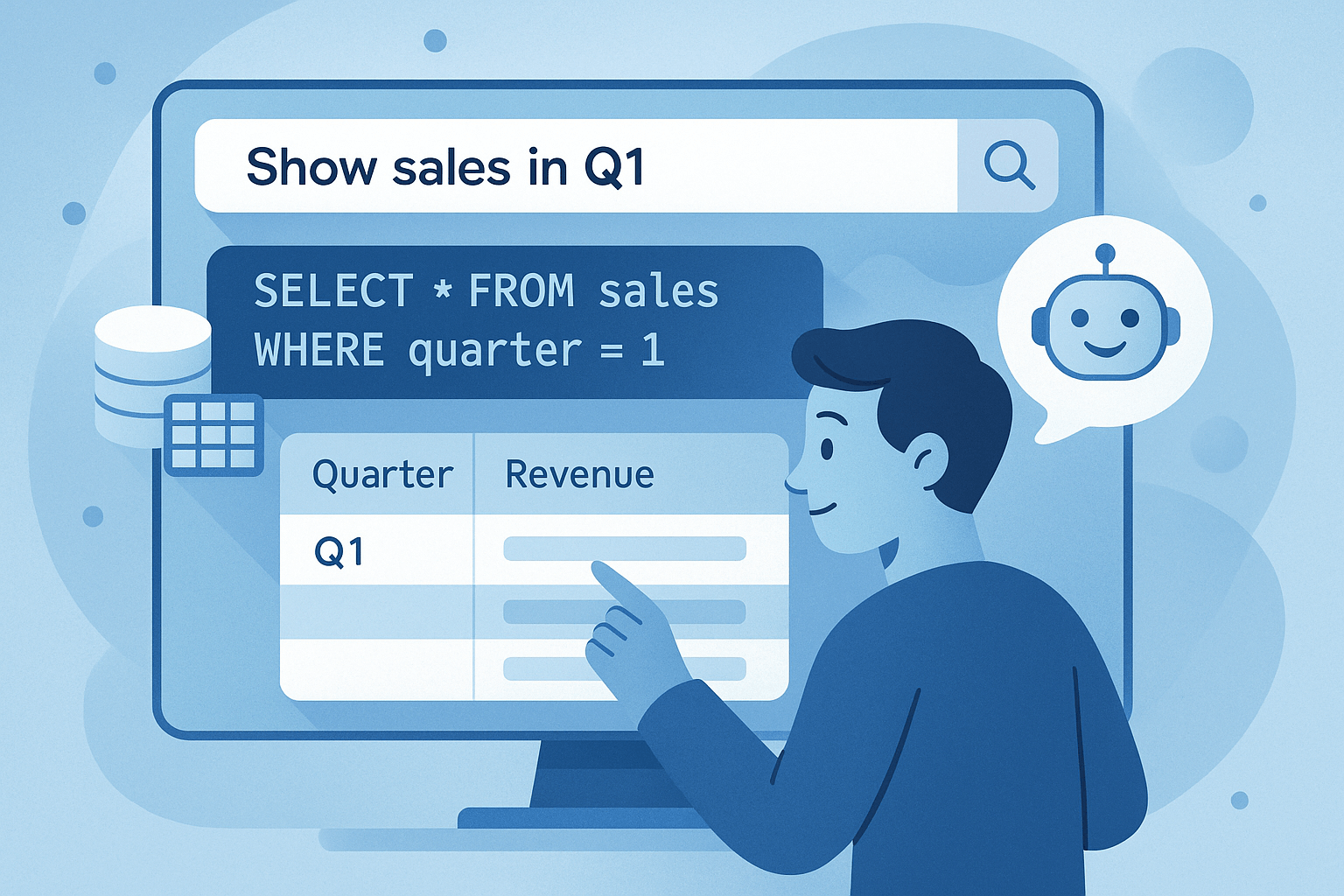

- The Intelligent Query Engine (Your Virtual Assistant): We developed a custom engine that served as the bridge between CRM executives and their new AI assistant. When an executive asked a question, the engine sprang into action:

  - It converted the question into a vector with the same embedding model used for the documents, so it could search by meaning rather than by keyword (semantic search).

  - It rapidly pulled the most relevant documents from the vector database.

  - Finally, it fed this retrieved context, along with the original question, into the fine-tuned Llama model, which then generated an accurate, concise answer in real time.
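Those three query-time steps (semantic search, retrieval, grounded generation) form a retrieval-augmented generation loop. The sketch below assumes a `retrieve(question, k)` callable that wraps the embedding model and vector index, and a `generate(prompt)` callable that wraps the fine-tuned Llama model; both are hypothetical placeholders, as are the prompt wording and the character budget.

```python
from typing import Callable, List, Tuple

Chunk = Tuple[str, str]  # (source_id, text), most relevant first


def build_prompt(question: str, chunks: List[Chunk], max_chars: int = 4000) -> str:
    """Assemble a grounded prompt from retrieved chunks.

    Chunks are added in ranking order until the character budget is used up,
    a crude stand-in for counting tokens against the model's context window.
    """
    parts = []
    used = 0
    for source_id, text in chunks:
        part = f"[{source_id}] {text}"
        if used + len(part) > max_chars:
            break
        parts.append(part)
        used += len(part)
    context = "\n".join(parts) if parts else "(no relevant documents found)"
    # Hypothetical instruction wording: keep the model within the retrieved context.
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


def answer(question: str,
           retrieve: Callable[[str, int], List[Chunk]],
           generate: Callable[[str], str],
           k: int = 3) -> str:
    """Semantic search -> context assembly -> generation."""
    chunks = retrieve(question, k)
    prompt = build_prompt(question, chunks)
    return generate(prompt)
```

Passing `retrieve` and `generate` in as functions keeps the orchestration testable with stubs: `answer(q, fake_retrieve, lambda p: p)` simply returns the assembled prompt. In production the character budget would normally be replaced by a token count from the model’s tokenizer.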

This integrated approach transformed their customer service, empowering executives with an intelligent virtual assistant seamlessly embedded into their daily CRM workflow.

The Tech Behind the Breakthrough

The success of this highly secure solution was built upon a carefully selected and integrated technology stack, all configured for peak performance within a private cloud environment:

- Core AI Model: Llama (latest stable version) – The generative powerhouse, deployed directly on dedicated GPU servers.

- Training & Adaptation: PyTorch / Hugging Face Transformers – For fine-tuning Llama on the client’s proprietary data.

- Lightning-Fast Search: Faiss (or a comparable open-source alternative such as Milvus or Chroma) – The vector index used for rapid, high-dimensional similarity search.

- Text-to-Vector Conversion: Sentence-BERT – The model that converts all text (documents and queries) into numerical vectors (embeddings) for similarity search.

- Application Backbone: Python with FastAPI or Flask – Powering the intelligent query engine and handling all API interactions.

- Data Organization: PostgreSQL or MongoDB – For managing metadata and non-vectorial aspects of the knowledge base.

- Deployment & Scalability: Docker / Kubernetes – Ensuring robust packaging, deployment, and effortless scaling of all AI components.

- Secure Foundation: Client’s Private Cloud Servers – The bedrock hosting all computational and storage resources, guaranteeing absolute data residency.

- Seamless Connection: RESTful API – The secure interface enabling smooth communication between the existing CRM and our new AI knowledge base.
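The stack splits storage between the vector index and a separate database for metadata. A minimal sketch of that split, using Python’s built-in `sqlite3` as a stand-in for PostgreSQL so it runs without a server: the vector index returns only integer ids, and a metadata table maps each id back to its source document. The `chunk_metadata` table, its columns, and the helper functions are hypothetical.

```python
import sqlite3


def create_store(path=":memory:"):
    """Open a metadata store (sqlite3 here, standing in for PostgreSQL)."""
    conn = sqlite3.connect(path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS chunk_metadata (
            vector_id  INTEGER PRIMARY KEY,  -- id returned by the vector index
            doc_id     TEXT NOT NULL,
            title      TEXT NOT NULL,
            chunk_no   INTEGER NOT NULL,
            updated_at TEXT NOT NULL         -- ISO-8601 date
        )
        """
    )
    return conn


def register_chunk(conn, vector_id, doc_id, title, chunk_no, updated_at):
    """Record where an embedded chunk came from."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO chunk_metadata VALUES (?, ?, ?, ?, ?)",
            (vector_id, doc_id, title, chunk_no, updated_at),
        )


def resolve_hits(conn, vector_ids):
    """Map ids from a similarity search back to source documents,
    preserving the ranking order of `vector_ids`; unknown ids are dropped."""
    if not vector_ids:
        return []
    # Only "?" placeholders are interpolated; values are still bound safely.
    placeholders = ",".join("?" for _ in vector_ids)
    rows = conn.execute(
        "SELECT vector_id, doc_id, title, chunk_no, updated_at "
        f"FROM chunk_metadata WHERE vector_id IN ({placeholders})",
        list(vector_ids),
    ).fetchall()
    by_id = {row[0]: row for row in rows}
    return [by_id[v] for v in vector_ids if v in by_id]
```

Keeping metadata out of the vector index would let the CRM display a source title and last-updated date alongside each answer, and lets documents be re-indexed or retired without retraining the language model.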

The Impact: Secure, Smart, and Scalable Support

By bringing Generative AI into their private domain, this organization not only enhanced their customer service capabilities but also reinforced their commitment to data security. The solution has drastically reduced response times, improved the accuracy of information provided, and empowered their CRM executives with an invaluable tool. This case demonstrates that advanced AI capabilities don’t always require a leap into the public cloud; with strategic design and implementation, they can thrive securely within your own controlled environment.
