Dragonfly DB Over Redis: The Future of In-Memory Datastores

In-memory databases have become a cornerstone of modern application development, providing ultra-fast data access by storing information in RAM rather than on slower disk-based storage. This architecture allows for response times of a millisecond or less, making in-memory databases ideal for use cases that require real-time data processing, caching, session management, and fast analytics. As data-driven applications continue to demand higher performance, choosing the right in-memory database becomes crucial.

What is Redis?

Redis is an open-source, in-memory data structure store that can be used in various capacities, including:

- Database

- Message broker

- Cache

- Streaming engine

Redis is widely regarded for its speed, flexibility, and powerful features, making it suitable for a wide range of use cases. Let’s dive deeper into what makes Redis so special.

Redis Data Structures

One of Redis’ core strengths is the variety of data structures it supports, including:

- Hashes

- Lists

- Sets

- Sorted Sets

- Bitmaps

- Geo-spatial indexes (a technique for efficiently storing and retrieving data by geographic location)

- Streams

These data structures can be used to build a wide range of applications, such as:

- Real-time chat applications

- Message buffers

- Gaming leaderboards

- Authentication session stores

- Media streaming platforms

- Real-time analytics dashboards

Why Is Redis Special?

Redis stands out due to several key features that make it a powerful tool in the hands of developers.

Atomic Operations

Every individual command in Redis is atomic. Once a command starts executing, Redis completes it fully before starting the next one, so two clients can never observe or modify a key halfway through another client's command.

For example, atomic operations include:

- Putting a key into the store

- Adding an element to a list

- Performing a set union or intersection

- Incrementing a value

Because Redis executes commands on a single thread and never interleaves them, the outcome of each operation is predictable and reliable.
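To make the idea concrete, here is a minimal sketch of a single-threaded command loop. It is a toy model, not Redis code: the `TinyStore` class, its `submit` and `run` methods, and the two supported commands are all hypothetical names chosen for illustration. The point is only that when commands are executed one at a time, increments from several clients cannot overwrite each other.

```python
from collections import deque


class TinyStore:
    """Toy single-threaded store: each command runs start to finish, one at a time."""

    def __init__(self):
        self.data = {}
        self.queue = deque()  # pending (client_id, command, args) tuples

    def submit(self, client_id, command, *args):
        # Clients only enqueue work; nothing is executed here.
        self.queue.append((client_id, command, args))

    def run(self):
        # The one and only execution loop: no two commands ever overlap.
        replies = []
        while self.queue:
            client_id, command, args = self.queue.popleft()
            replies.append((client_id, self._execute(command, args)))
        return replies

    def _execute(self, command, args):
        if command == "SET":
            key, value = args
            self.data[key] = value
            return "OK"
        if command == "INCR":
            (key,) = args
            self.data[key] = int(self.data.get(key, 0)) + 1
            return self.data[key]
        raise ValueError(f"unknown command: {command}")


store = TinyStore()
# Three hypothetical clients each send 100 increments for the same key.
for client_id in range(3):
    for _ in range(100):
        store.submit(client_id, "INCR", "page_views")

store.run()
final_count = store.data["page_views"]
print(final_count)
```

No lock is needed around the read-modify-write inside `INCR`, because the loop never starts a second command before the first has finished.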

In-Memory Data Storage

Redis is an in-memory data store, meaning that all data is stored in RAM. This makes Redis exceptionally fast, with low-latency access to data. It is frequently used as a cache for this reason.

However, Redis also offers configurable persistence options for those who want to retain data even in the event of a system crash:

- Snapshotting: Periodically dump data to disk.

- Append-only file (AOF): A log of every write command, which Redis replays on restart to rebuild the dataset.

- No persistence: Data is kept in memory only, and if the system crashes, the data is lost.

This flexibility allows developers to configure Redis based on their specific requirements.
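The difference between the first two options can be sketched in a few lines. The example below is a simplified model, assuming an in-memory list stands in for the append-only file and a deep copy stands in for a snapshot written to disk; `ToyPersistentStore` and its methods are hypothetical names, not part of any Redis client.

```python
import copy


class ToyPersistentStore:
    """Toy store with two recovery paths: a point-in-time snapshot and a command log."""

    def __init__(self):
        self.data = {}
        self.aof = []  # stands in for the append-only file on disk

    def set(self, key, value):
        self.data[key] = value
        self.aof.append(("SET", key, value))  # log every write command

    def delete(self, key):
        self.data.pop(key, None)
        self.aof.append(("DEL", key))

    def snapshot(self):
        # Point-in-time copy of the whole dataset.
        return copy.deepcopy(self.data)

    @classmethod
    def from_snapshot(cls, snapshot):
        store = cls()
        store.data = copy.deepcopy(snapshot)
        return store

    @classmethod
    def from_aof(cls, aof):
        # Rebuild state by replaying the logged commands in order.
        store = cls()
        for entry in aof:
            if entry[0] == "SET":
                store.set(entry[1], entry[2])
            elif entry[0] == "DEL":
                store.delete(entry[1])
        return store


store = ToyPersistentStore()
store.set("user:1", "alice")
snap = store.snapshot()        # snapshot taken here
store.set("user:2", "bob")     # these writes happen after the snapshot
store.delete("user:1")

from_snap = ToyPersistentStore.from_snapshot(snap)
from_log = ToyPersistentStore.from_aof(store.aof)
```

Recovering from the snapshot loses the two writes made after it was taken, while replaying the log reproduces the latest state. That is the trade-off in miniature: snapshots are compact but can be stale, and a command log is more complete but has to be replayed.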

Rich Feature Set

Redis comes with several powerful features, including:

- Transactions: Group multiple commands so they are executed in sequence as a single unit, with no commands from other clients interleaved.

- Pub/Sub messaging: Allows clients to subscribe to channels and receive real-time updates.

- TTL (Time-to-Live) on keys: Set expiration times on keys, which is useful for session management and cache invalidation.

- LRU eviction: Automatically evict the least recently used items when the cache reaches its memory limit.

These features make Redis incredibly versatile, whether you’re using it as a cache, message broker, or database.
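As a rough illustration of how TTLs and LRU eviction interact in a cache, here is a small self-contained sketch. It is not how Redis implements either feature (Redis uses an approximated LRU rather than an exact ordering); `ToyCache`, `max_keys`, and the explicit `now` argument are assumptions made so the example is deterministic.

```python
from collections import OrderedDict


class ToyCache:
    """Toy cache with per-key TTL and exact LRU eviction."""

    def __init__(self, max_keys):
        self.max_keys = max_keys
        self.items = OrderedDict()  # key -> (value, expires_at or None), oldest first

    def set(self, key, value, now, ttl=None):
        expires_at = None if ttl is None else now + ttl
        self.items[key] = (value, expires_at)
        self.items.move_to_end(key)  # mark as most recently used
        while len(self.items) > self.max_keys:
            self.items.popitem(last=False)  # evict the least recently used key

    def get(self, key, now):
        entry = self.items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if expires_at is not None and now >= expires_at:
            del self.items[key]  # expired: behave as if the key never existed
            return None
        self.items.move_to_end(key)  # a read also counts as a use
        return value


cache = ToyCache(max_keys=2)
cache.set("session:a", "alice", now=0, ttl=30)
cache.set("session:b", "bob", now=0)
cache.get("session:a", now=10)            # touching a makes b the LRU key
cache.set("session:c", "carol", now=10)   # cache is full, so b is evicted

evicted = cache.get("session:b", now=10)  # removed by LRU eviction
expired = cache.get("session:a", now=31)  # removed because its TTL ran out
alive = cache.get("session:c", now=31)    # still present
```

Passing `now` explicitly instead of reading the clock keeps the example repeatable; a real cache would use the current time.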

Single-Threaded Architecture and I/O Multiplexing

Redis executes commands on a single thread, which might sound like a limitation, but it’s actually one of its greatest strengths. Redis uses I/O multiplexing to handle multiple connections concurrently without the need for complex multi-threading.

How I/O Multiplexing Works:

I/O system calls are generally blocking: when a process reads from a socket, it waits until data arrives before proceeding. A common workaround is one thread per connection, which brings the overhead of threads and of locking mechanisms such as mutexes and semaphores. Redis avoids this by using I/O multiplexing.

The idea behind I/O multiplexing is that Redis monitors multiple sockets and only reads from the ones that are ready, minimizing idle time and ensuring efficient resource usage. This allows Redis to handle a large number of concurrent TCP connections efficiently without the need for complex concurrency control.
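The same pattern can be tried out with Python's standard `selectors` module. This is only a sketch of the general technique, not Redis's own event loop: three local socket pairs stand in for client connections, and the `client-N` labels are made up for the example.

```python
import selectors
import socket

selector = selectors.DefaultSelector()

# Three connected socket pairs stand in for three client connections.
pairs = [socket.socketpair() for _ in range(3)]
for index, (server_side, _client_side) in enumerate(pairs):
    server_side.setblocking(False)
    selector.register(server_side, selectors.EVENT_READ, data=f"client-{index}")

# Only clients 0 and 2 send a request; client 1 stays idle.
pairs[0][1].sendall(b"PING")
pairs[2][1].sendall(b"GET key")

# One thread waits on all sockets at once and reads only the ready ones.
received = {}
while len(received) < 2:
    events = selector.select(timeout=1.0)
    if not events:
        break  # nothing became ready in time
    for key, _mask in events:
        received[key.data] = key.fileobj.recv(1024)

selector.close()
for server_side, client_side in pairs:
    server_side.close()
    client_side.close()

print(received)
```

The idle connection costs nothing here: the loop never blocks on it, and no extra thread is parked waiting for it.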

What Is Dragonfly DB?

Founded in March 2022 by Oded Poncz and Roman Gershman, former engineers at Google, Dragonfly DB was created to solve the challenges of scaling Redis that they experienced firsthand. With dual headquarters in Tel Aviv and San Francisco, and a global team, Dragonfly is a revolutionary, drop-in replacement for Redis, designed to handle modern application workloads at scale.

Dragonfly excels at vertical scaling, supporting millions of operations per second and handling terabyte-sized datasets, all on a single instance. Fully compatible with Redis and Memcached APIs, it can be adopted with zero code changes. Compared to traditional in-memory datastores, Dragonfly offers up to 25x higher throughput, improved cache hit rates, and lower latency. Best of all, it can achieve these benefits while using up to 80% fewer resources for the same workload.

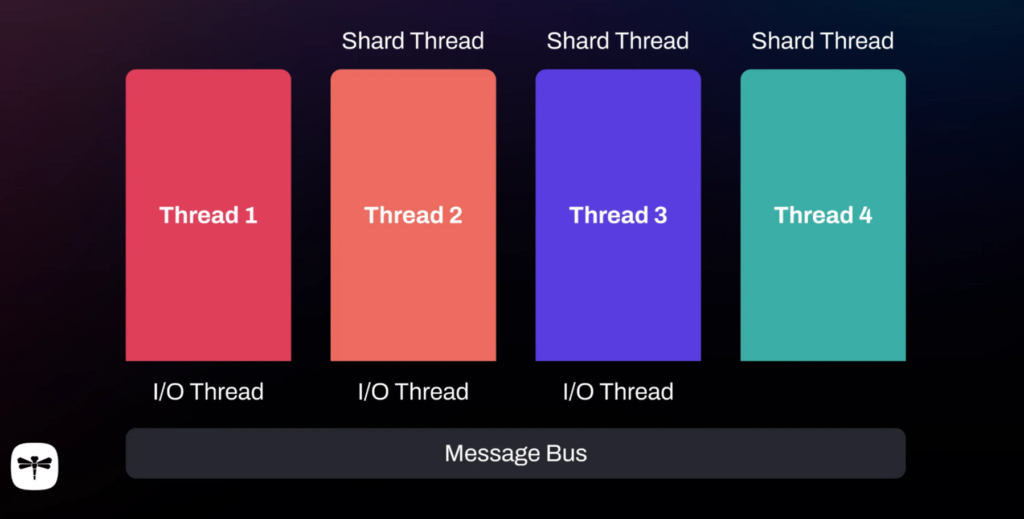

DragonflyDB and Redis: Multi-Threaded Efficiency Versus Single-Thread Simplicity

- Redis’s Single-Thread Approach

Redis relies on a single-threaded, event-driven model, which is simple and efficient for many workloads. However, as traffic grows, this single thread can become a bottleneck, limiting the system’s ability to scale efficiently.

- DragonflyDB’s Multi-Threaded Architecture

DragonflyDB, by contrast, introduces a modern multi-threaded design. By splitting tasks across multiple threads, it can fully utilize multi-core processors, which significantly boosts throughput and reduces latency.

- Sharding for Parallel Processing

DragonflyDB divides its data into independent segments, or shards. Each shard is handled by its own dedicated thread, allowing requests to be processed in parallel and avoiding the bottleneck of Redis’s single-thread model.

- Minimal Locking for Higher Efficiency

This architecture also reduces the need for complex locking mechanisms, as each key is managed by a single thread within its shard. This approach minimizes contention, boosting performance and reducing delays.

- Asynchronous Operations for Responsiveness

To further enhance responsiveness, DragonflyDB performs asynchronous operations using technologies like io_uring for disk tasks. This way, long-running jobs, such as backups, don’t slow down other operations, keeping the system responsive even during heavy loads.
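The shard-per-thread idea can be sketched as follows. This is a simplified model and not Dragonfly's implementation: the shard count, the CRC32-based `shard_for` function, and the queue-based message passing are all assumptions chosen to keep the example short. Python threads also do not run in parallel the way native threads do, so the sketch shows key ownership rather than a speedup.

```python
import queue
import threading
import zlib

NUM_SHARDS = 4  # hypothetical; a real deployment would size this to the CPU


def shard_for(key):
    # Deterministic key-to-shard mapping (CRC32 is an assumption for this sketch).
    return zlib.crc32(key.encode("utf-8")) % NUM_SHARDS


class Shard(threading.Thread):
    """Owns one slice of the keyspace; only this thread touches self.data."""

    def __init__(self):
        super().__init__(daemon=True)
        self.data = {}
        self.inbox = queue.Queue()

    def run(self):
        while True:
            task = self.inbox.get()
            if task is None:  # shutdown signal
                break
            command, key, value, reply = task
            if command == "SET":
                self.data[key] = value
                reply.put("OK")
            elif command == "GET":
                reply.put(self.data.get(key))
            else:
                reply.put(f"ERR unknown command {command}")


shards = [Shard() for _ in range(NUM_SHARDS)]
for shard in shards:
    shard.start()


def execute(command, key, value=None):
    # Route the request to the thread that owns the key, then wait for its reply.
    reply = queue.Queue(maxsize=1)
    shards[shard_for(key)].inbox.put((command, key, value, reply))
    return reply.get()


for i in range(100):
    execute("SET", f"user:{i}", i)

value = execute("GET", "user:42")
keys_per_shard = [len(shard.data) for shard in shards]

for shard in shards:
    shard.inbox.put(None)
    shard.join()

print(value, keys_per_shard)
```

Because each key is only ever read or written by the one thread that owns its shard, the dictionaries need no locks. Coordination happens through message passing instead.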

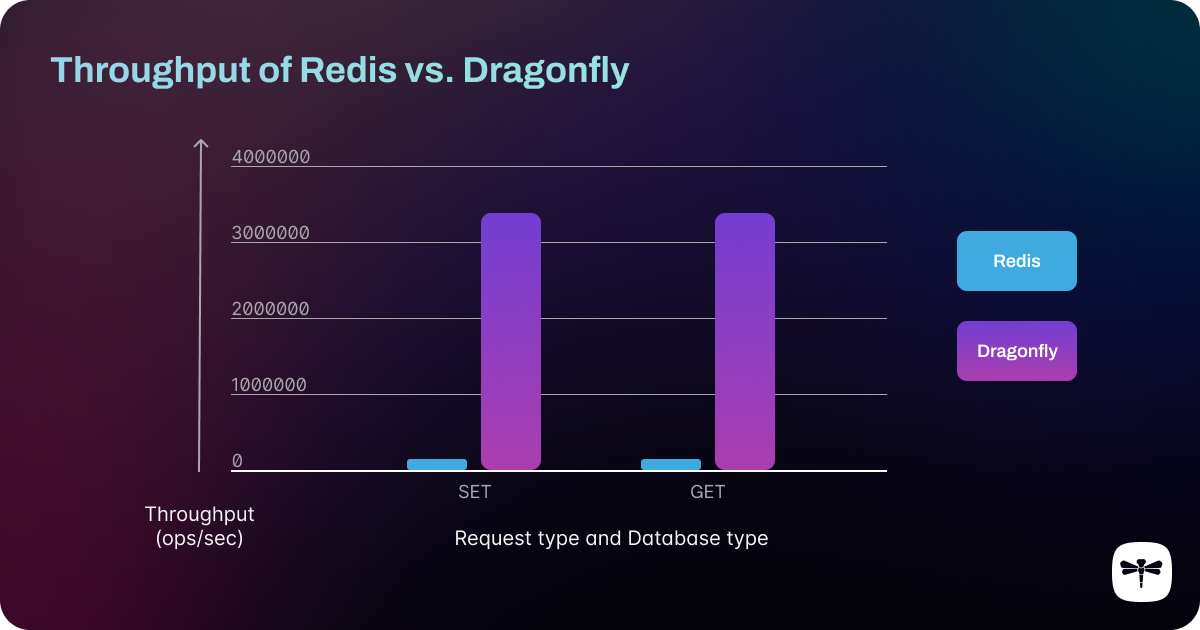

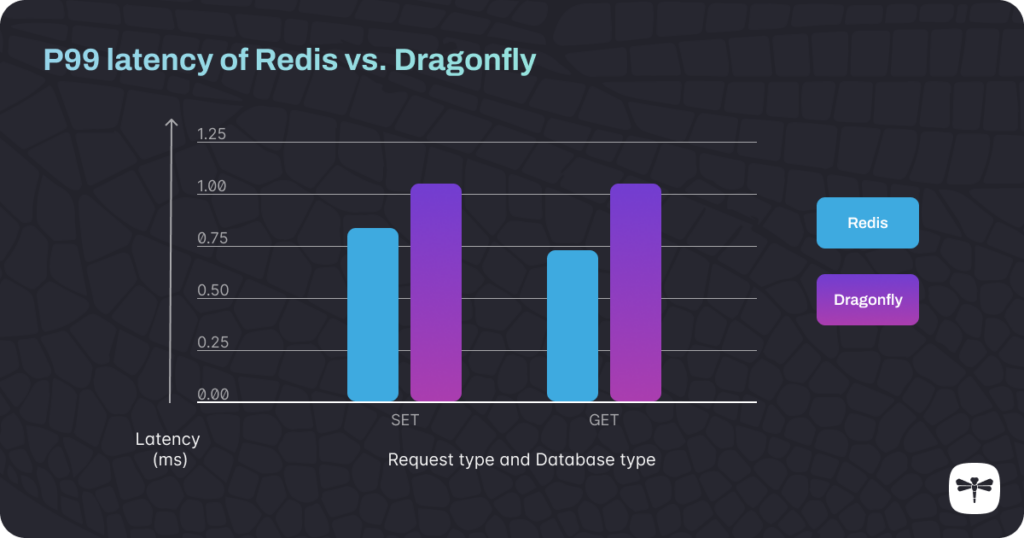

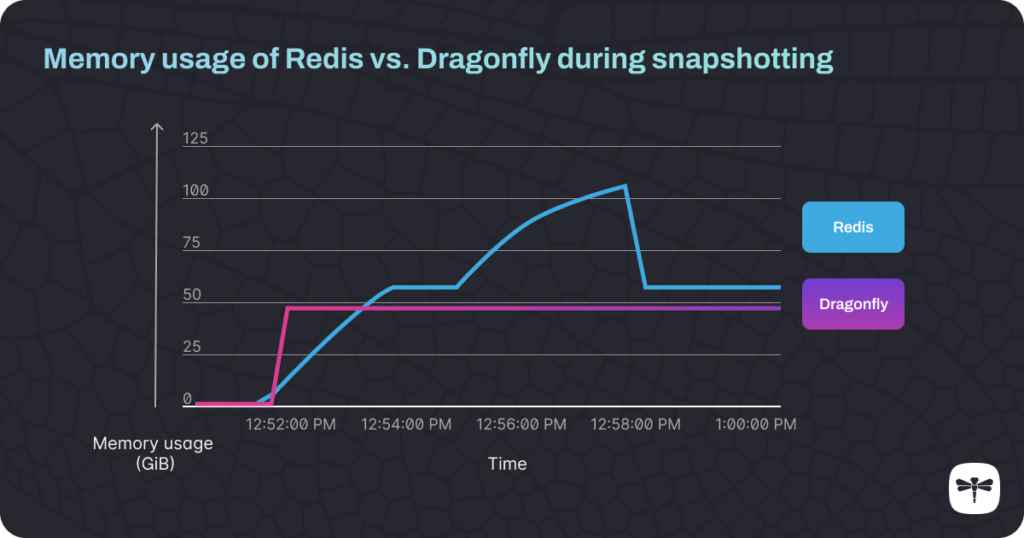

Benchmarking Comparison: Dragonfly DB vs. Redis

To highlight Dragonfly’s significant performance advantage over Redis, we conducted benchmark tests using a single instance of each. Below is a summary of our findings across three key metrics: throughput, latency, and memory efficiency during the snapshotting process.

Test #1: Throughput

Throughput measures the number of operations a database can handle per second, making it a critical performance metric. In our tests, we populated both Redis and Dragonfly databases with 10 million keys and used memtier_benchmark to simulate real-world usage.

Test #2: Latency

Latency, especially tail latency (P99), shows how long the slowest requests take to complete. This matters most under high throughput, when queued requests can be delayed. P99 latency is the value that 99% of requests complete within, so it reflects the slowest 1% of requests, which can hurt user experience if they take too long.
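To see why P99 is reported alongside the average, here is a small worked example. The latency values are made up purely for illustration and are not benchmark results, and `percentile` is a helper written for this sketch using the nearest-rank method.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Made-up latencies in milliseconds: 98 fast requests and 2 slow ones.
latencies_ms = [1.0] * 98 + [40.0, 50.0]

p50 = percentile(latencies_ms, 50)
p99 = percentile(latencies_ms, 99)
mean = sum(latencies_ms) / len(latencies_ms)

print(f"mean={mean:.2f} ms, p50={p50} ms, p99={p99} ms")
```

In this sample the mean and the median both look healthy, while P99 exposes the slow requests that a fraction of users would actually experience.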

Test #3: Memory Efficiency During Snapshotting

Snapshotting is a known performance bottleneck for Redis: it forks a child process to write the snapshot, and copy-on-write can sharply increase memory usage while writes continue during the process. Dragonfly, by contrast, handles snapshotting far more efficiently.

Conclusion: Redis vs. Dragonfly — A Clear Winner for Performance and Scalability

As demonstrated by our benchmarking results, Dragonfly delivers a significant performance boost over Redis. With up to 25X higher throughput and negligible increases in latency, Dragonfly proves itself as a superior option for demanding workloads. Its efficient snapshotting process, which avoids the memory spikes seen in Redis, further highlights its robustness for real-world applications.

Whether you’re launching a new application or scaling an existing Redis deployment, Dragonfly offers a seamless, high-performance alternative. Fully compatible with Redis and requiring no code changes, Dragonfly provides effortless scalability and long-term resource efficiency. Its modern architecture ensures that it can grow alongside your product without suffering from the resource exhaustion that can plague Redis.

For developers looking for an easy, drop-in replacement with better performance, Dragonfly is the clear choice. It’s a simple solution to Redis’ scalability challenges, allowing you to start small and grow without compromising on speed or reliability.
