Python Parallel Processing

“If you can’t explain it simply, you don’t understand it well enough.”

INTRODUCTION TO PARALLEL PROCESSING

In Python programming, efficient execution of tasks is essential for building high-performance applications. Threads and multiprocessing are two powerful techniques for running work concurrently or in parallel, improving the speed and responsiveness of your code. However, understanding when and how to use each of them can be challenging. “Demystifying Threads in Python” is a beginner-friendly post that aims to help you get started in the world of parallel processing.

TABLE OF CONTENTS

- Concurrency AND Parallel Processing

- Concurrency

- Multiprocessing / Parallel Processing

- Threads vs Processes

- How’s, What’s and Why’s of Parallel Processing

- When to use threads

- When not to use threads

- Things to keep in mind while using threads

- The Anti Example : Calculate Prime : Building Up

- The synchronised way

- The concurrent way 1

- The concurrent way 2

- The final concurrent way

- Concurrent And Futures of Parallel Processing

- Concurrent

- Futures

- Conclusion

- References

CONCURRENCY AND PARALLEL PROCESSING

Concurrency

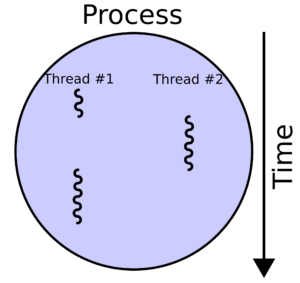

Concurrency refers to the ability of a computer to make progress on multiple tasks during the same period of time. It looks as though more than one task is being executed simultaneously.

What actually happens behind the scenes, on a single core, is that the CPU jumps from one task to another so fast that, from a human perspective, the tasks seem to run in parallel. In reality, the CPU switches context from one task to another and gives each task a slice of time. If we observe carefully, only one task is running at any particular moment.

Multiprocessing / Parallel Processing

Multiprocessing is true parallelism. A modern CPU is divided into cores, and each core can handle a task independently. Hence, in an ideal scenario, a 4-core processor can run 4 tasks in parallel. Multiprocessing is the ability of a computer to use these cores properly in order to achieve true parallelism.
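To make the idea concrete, here is a minimal sketch that spreads CPU-heavy work over several processes with `ProcessPoolExecutor` from the standard library. The function `count_primes_below` and the chosen limits are made up for illustration, and the actual speed-up depends on how many cores your machine has.

```python
import os
from concurrent.futures import ProcessPoolExecutor


def count_primes_below(limit: int) -> int:
    """Count primes below `limit` by trial division (deliberately CPU-heavy)."""
    count = 0
    for n in range(2, limit):
        # n is prime if no d in [2, sqrt(n)] divides it
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


if __name__ == "__main__":
    # Illustrative workloads; each one is handed to a separate worker process.
    limits = [20_000, 30_000, 40_000, 50_000]
    # At most one worker per core, and never more workers than tasks.
    workers = min(len(limits), os.cpu_count() or 1)
    with ProcessPoolExecutor(max_workers=workers) as pool:
        for limit, total in zip(limits, pool.map(count_primes_below, limits)):
            print(f"primes below {limit}: {total}")
```

The `if __name__ == "__main__":` guard matters here: on platforms that start worker processes by re-importing the main module, it keeps the workers from launching pools of their own.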

THREADS VS PROCESSES

Let’s start from the beginning. The smallest unit of execution in a process is an instruction. For example, adding two numbers is an instruction that we give to a computer.

Threads are a higher level of abstraction: simply put, a thread is a sequence of instructions. A thread can run independently of other threads in the same process. Threads of the same process share the same memory space, allowing them to share data and resources.

Processes are independent units of execution and consist of one or more threads. Each process has its own memory space. Processes are isolated from each other, meaning that a crash or error in one process generally does not affect other processes.
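The shared memory space of threads is easy to see in a small sketch. The names `shared_log` and `worker` are hypothetical; the point is only that every thread appends to the very same list object.

```python
import threading

# One list object, visible to every thread in this process.
shared_log = []


def worker(name: str) -> None:
    # Each thread appends to the same list; nothing is copied.
    shared_log.append(f"hello from {name}")


threads = [threading.Thread(target=worker, args=(f"thread-{i}",)) for i in range(3)]
for t in threads:
    t.start()
for t in threads:
    t.join()

# Sorted, because the order in which threads run is not guaranteed.
print(sorted(shared_log))
```

A separate process, by contrast, would work on its own copy of `shared_log`, so the parent would not see what the child appended unless the data is sent back explicitly.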

HOW’S, WHAT’S AND WHY’S OF PARALLEL PROCESSING

Let’s continue to explore threads, as it is important to understand when to use them. Later in this section we will dive into examples.

WHEN TO USE THREADS 🏴:

Threads are most useful in I/O-bound use cases, including those that wait on user input.

Threads are highly effective for tasks that spend a significant amount of time waiting for input/output operations to complete. Examples include reading/writing files, network communication, and database queries.
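A rough sketch of why this works: while one thread waits, another can run. Here `time.sleep` stands in for a real network or disk wait, and `fake_download` is a made-up helper, not a real download API.

```python
import threading
import time


def fake_download(name: str, seconds: float, results: dict) -> None:
    """Stand-in for an I/O call: the thread simply waits, as it would on a socket."""
    time.sleep(seconds)
    results[name] = f"{name} done"


results = {}
jobs = [("a", 0.2), ("b", 0.2), ("c", 0.2)]

start = time.perf_counter()
threads = [threading.Thread(target=fake_download, args=(name, secs, results))
           for name, secs in jobs]
for t in threads:
    t.start()
for t in threads:
    t.join()
elapsed = time.perf_counter() - start

# The three waits overlap, so the total is typically close to the longest
# single wait (about 0.2 s) instead of the sum of all three (0.6 s).
print(f"{len(results)} downloads finished in {elapsed:.2f}s")
```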

WHEN NOT TO USE THREADS 🚩:

It is important to understand that threads give no significant reduction in execution time for tasks that are mostly CPU bound, that is, tasks that involve heavy computation and need most of the CPU’s processing power. In standard CPython builds, the global interpreter lock (GIL) allows only one thread to execute Python bytecode at a time, so CPU-bound threads take turns instead of running in parallel. In these cases the execution time may even increase: as the CPU jumps from one thread to another, dividing time between them, the time taken to switch context is added to the execution time of the task.
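One way to check this on your own machine is to time the same CPU-bound function run sequentially and run in threads. This is only a sketch: `busy_sum`, `N` and `TASKS` are arbitrary choices, and the exact timings will differ from run to run.

```python
import threading
import time


def busy_sum(n: int) -> int:
    """Pure-Python arithmetic: CPU bound, no waiting on I/O."""
    total = 0
    for i in range(n):
        total += i * i
    return total


N, TASKS = 200_000, 4

# Sequential run: one task after another on the main thread.
start = time.perf_counter()
sequential = [busy_sum(N) for _ in range(TASKS)]
sequential_time = time.perf_counter() - start

# Threaded run: one thread per task, each writing into its own slot.
threaded = [None] * TASKS


def run(slot: int) -> None:
    threaded[slot] = busy_sum(N)


start = time.perf_counter()
threads = [threading.Thread(target=run, args=(i,)) for i in range(TASKS)]
for t in threads:
    t.start()
for t in threads:
    t.join()
threaded_time = time.perf_counter() - start

print(f"sequential: {sequential_time:.3f}s, threaded: {threaded_time:.3f}s")
```

On a typical CPython installation the two timings come out close to each other, and the threaded one is often slightly worse.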

THINGS TO KEEP IN MIND WHILE USING THREADS ⚠:

There are several things to keep in mind while using threads. The most important is the race condition.

Race conditions: In a multithreaded environment, when multiple threads access shared data simultaneously and at least one of them modifies the data, the outcome depends on the order in which the threads happen to run. Race conditions result in unpredictable and potentially erroneous behavior.
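The sketch below shows the usual remedy, a `threading.Lock` around the read-modify-write step. The `Counter` class and the helper names are hypothetical. The unprotected version is included for comparison; whether it actually loses updates on a given run depends on the interpreter and on timing.

```python
import threading


class Counter:
    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def unsafe_increment(self):
        # Read and write are separate steps; another thread can run in between.
        current = self.value
        self.value = current + 1

    def safe_increment(self):
        # Only one thread at a time may execute this block.
        with self._lock:
            self.value += 1


def hammer(method, times):
    for _ in range(times):
        method()


def run(method_name, n_threads=4, times=50_000):
    counter = Counter()
    method = getattr(counter, method_name)
    threads = [threading.Thread(target=hammer, args=(method, times))
               for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


safe_total = run("safe_increment")
unsafe_total = run("unsafe_increment")

print("with lock:   ", safe_total)    # always 200000
print("without lock:", unsafe_total)  # may be lower if updates were lost
```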

Other hazards, such as deadlock and starvation, also need to be kept in mind. One can read more about them in Mastering Concurrency in Python: Create faster programs using concurrency, asynchronous, multithreading, and parallel programming by Quan Nguyen.

THE ANTI EXAMPLE OF PARALLEL PROCESSING: Calculate Prime – Building UP

We will build up from a simple problem: finding whether a number is prime or not. We will explain the code as we go along, and in between we will clear the air on why it is an anti-example.

The Synchronized Way:

```python
import math


class PrimeCheck:
    def __init__(self, data):
        self.prime_list = data

    # Function to check if a number is prime
    def is_prime(self, data: int):
        if data == 2 or data == 3:
            print(f'{data} is prime')
        elif data <= 1:
            print('Are you kidding me')
        else:
            # math.isqrt returns the exact integer square root, even for very large ints
            t = math.isqrt(data)
            flag = 0
            # Check for factors
            for i in range(2, t + 1):
                if data % i == 0:
                    flag = 1
                    break
            if flag:
                print(f'{data} is not prime')
            else:
                print(f'{data} is prime')

    # Function to process a list of numbers and check if each is prime
    def prime_feeder(self):
        for each in self.prime_list:
            self.is_prime(each)


prime_list = [1, 2, 23454, 5432, 123456543, 234567654321, 23456789876543254323456765765434,
              65434567654321, 45654323456543, 234567654565432, 345654323456543, 45654312345432,
              3456764323456543, 456543234565432, 542345654323456, 3456543234565432]

# Create an instance of the PrimeCheck class and check for prime numbers in the list
PrimeCheck(prime_list).prime_feeder()
```

Let’s see if we can improve the execution speed by using threads.

The Concurrent Way 1:

```python
import threading
import time
import math


# Define a custom thread class for checking prime numbers
class PrimeThread(threading.Thread):
    def __init__(self, x):
        super().__init__()
        self.x = x

    @staticmethod
    def is_prime(data: int):
        # Check if a number is prime
        if data == 2 or data == 3:
            print(f'{data} is prime')
        elif data <= 1:
            print('Are you kidding me')
        else:
            # math.isqrt returns the exact integer square root, even for very large ints
            t = math.isqrt(data)
            flag = 0
            # Check for factors to determine primality
            for i in range(2, t + 1):
                if data % i == 0:
                    flag = 1
                    break
            if flag:
                print(f'{data} is not prime')
            else:
                print(f'{data} is prime')

    # This method runs in the new thread once the thread is started
    def run(self):
        self.is_prime(self.x)


# List of numbers to check for primality
prime_list = [1, 2, 23454, 5432, 123456543, 234567654321, 23456789876543254323456765765434,
              65434567654321, 45654323456543, 234567654565432, 345654323456543, 45654312345432,
              3456764323456543, 456543234565432, 542345654323456, 3456543234565432]

# Create a list to store thread objects
threads = []

# Record the start time for performance measurement
start_time = time.perf_counter()

# Create and start a thread for each number in the prime_list
for each in prime_list:
    new_thread = PrimeThread(each)
    new_thread.start()
    threads.append(new_thread)

# Important: Wait for all threads to terminate before continuing with the main thread
for thread in threads:
    thread.join()

# Print the execution time in seconds
print(time.perf_counter() - start_time)
print("Whew..")
```

- start(): starts the thread’s activity and arranges for the run() method to be invoked in a separate thread of control.

- run(): contains the work the thread performs; it is executed once the thread has been started.

- is_alive(): returns a Boolean value indicating whether the thread is currently executing. (The older isAlive() spelling was removed in Python 3.9.)

- join(): blocks the calling thread until the thread whose join() is called terminates.
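The life cycle described by these methods can be traced with a short sketch. The `Waiter` class is hypothetical; it blocks on a `threading.Event` so that the thread is guaranteed to still be running when we check it. Note that the method is spelled `is_alive()` in current Python versions.

```python
import threading


class Waiter(threading.Thread):
    """Hypothetical thread that blocks until the main thread opens a gate."""

    def __init__(self, gate: threading.Event):
        super().__init__()
        self.gate = gate
        self.ran = False

    def run(self):
        self.gate.wait()  # block here until gate.set() is called
        self.ran = True


gate = threading.Event()
worker = Waiter(gate)

states = [worker.is_alive()]      # not started yet
worker.start()                    # run() now executes in a new thread
states.append(worker.is_alive())  # still blocked inside run()
gate.set()                        # let run() finish
worker.join()                     # wait for the thread to terminate
states.append(worker.is_alive())  # the thread has ended

print(states)  # [False, True, False]
```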

One can refer to the docs to learn more about the thread methods: Thread-based parallelism (Python 3.12.0 documentation).

The major thing to note is that we have created a new thread for each number, which is not a good practice. Calling .join() on every thread makes the main code wait for all the threads to finish their processing before it continues. After executing the code, the execution time we get is 0.003693028003908694 seconds.

This clears the air on why it is an anti-example. Here,

Execution_Time(Threading) > Execution_Time(Synchronous)

The increase in execution time can be justified. First, checking primality is CPU-bound work, so it is probably not a great example to introduce threading. Second, with too many threads (one thread per number), a large share of the time goes into creating threads and switching context from one thread to another.

Let’s play around with this example and try to eliminate the second difficulty by exploring another way to do the same thing, with some modifications.

The Concurrent Way 2:

```python
# Import required libraries
import threading
import time
import queue
from math import isqrt

# Define a list of input numbers to check for primality
input_prime = [1, 2, 23454, 5432, 123456543, 234567654321, 23456789876543254323456765765434,
               65434567654321, 45654323456543, 234567654565432, 345654323456543, 45654312345432,
               3456764323456543, 456543234565432, 542345654323456, 3456543234565432]

# Create an empty list to store the results of primality checks
output_prime = []

# Create a lock to ensure thread safety when updating the output_prime list
lock = threading.Lock()

# Create a queue to hold the input numbers
q = queue.Queue()

# Populate the queue with input numbers
for nums in input_prime:
    q.put(nums)


# Function to check if a number is prime
def is_prime(num):
    if num == 0 or num == 1:
        return f'{num} is not prime'
    if num == 2 or num == 3:
        return f'{num} is prime'
    # isqrt returns the exact integer square root, even for very large ints
    for i in range(2, isqrt(num) + 1):
        if num % i == 0:
            return f'{num} is not prime'
    return f'{num} is prime'


# Function to process numbers from the queue
def process_number():
    while True:
        try:
            # get_nowait() never blocks, so a thread cannot hang if another
            # thread took the last item after an emptiness check
            num = q.get_nowait()
        except queue.Empty:
            break
        res = is_prime(num)
        with lock:
            output_prime.append(res)


# Number of threads to use
threads = 4
thread_list = []

# Record the start time for performance measurement
start_time = time.perf_counter()

# Create and start threads for processing numbers
for _ in range(threads):
    th = threading.Thread(target=process_number)
    thread_list.append(th)
    th.start()

# Wait for all threads to finish
for th in thread_list:
    th.join()

# Record the end time for performance measurement
end_time = time.perf_counter()

# Calculate and print the execution time
print(end_time - start_time)

# Print the results of primality checks
print(*output_prime)
```

Execution time: 0.0011432539904490113 seconds

Now let’s delve deeper into the code. At the end we will simplify the same example with concurrent.futures.

We have introduced a Lock from the threading module. This is because, instead of printing the output, we are now storing it in output_prime. Appending to output_prime is the critical section, since several threads write to the same list. Hence the lock is necessary in order to preserve the output correctly. The lock is used as a context manager: the with statement lets a thread acquire the lock and release it automatically.

We have introduced another way to start a thread:

th = threading.Thread(target=process_number)

Here we have not inherited from the threading.Thread class. We have also maintained a queue that contains all the input numbers. Queue is thread safe and a good way to consume the input.

Lastly, we have declared that we will use 4 threads to process these numbers. They are all started in the loop, and all of them are joined so that we wait for them to finish before the main code continues.

Finally, we move on to the approach below, which is the one in common practice today.

The Final Concurrent Way:

```python
# Import the concurrent.futures module for thread management
import concurrent.futures
import time
from math import isqrt

# Define a list of input numbers to check for primality
input_prime = [1, 2, 23454, 5432, 123456543, 234567654321, 23456789876543254323456765765434,
               65434567654321, 45654323456543, 234567654565432, 345654323456543, 45654312345432,
               3456764323456543, 456543234565432, 542345654323456, 3456543234565432]


# Function to check if a number is prime
def is_prime(num):
    if num == 0 or num == 1:
        return f'{num} is not prime'
    if num == 2 or num == 3:
        return f'{num} is prime'
    # isqrt returns the exact integer square root, even for very large ints
    for i in range(2, isqrt(num) + 1):
        if num % i == 0:
            return f'{num} is not prime'
    return f'{num} is prime'


# Record the start time for performance measurement
start_time = time.perf_counter()

# Number of threads to use
threads = 4

# Create a list to store the results of primality checks using concurrent.futures
output_concurrent = []

# Use a ThreadPoolExecutor to run the primality checks on a pool of threads
with concurrent.futures.ThreadPoolExecutor(max_workers=threads) as executor:
    # Submit tasks for each input number to the executor and collect the resulting futures
    futures = [executor.submit(is_prime, each) for each in input_prime]

    # Iterate through the futures and retrieve the results
    for future in futures:
        output_concurrent.append(future.result())

# Calculate and print the execution time
print(time.perf_counter() - start_time)

# Print the results of primality checks using concurrent.futures
print(*output_concurrent)
```

Here we have used a ThreadPoolExecutor from the concurrent.futures module. We can easily specify the number of worker threads through max_workers, as shown above.

ThreadPoolExecutor handles the synchronization between its worker threads internally. The results keep their order because the futures are read in the order in which they were submitted, and only the main thread appends to the list. Hence we don’t need to acquire a Lock in this specific case.

ThreadPoolExecutor provides a higher level of abstraction and makes threads easier to manage.
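For simple cases like this one, a shorter variant is `executor.map`, which applies a function to each input and yields the results in input order. The sketch below uses a small made-up list of numbers and a Boolean `is_prime` in place of the string-returning version used earlier.

```python
from concurrent.futures import ThreadPoolExecutor
from math import isqrt


def is_prime(num: int) -> bool:
    """Trial division up to the integer square root."""
    if num < 2:
        return False
    for i in range(2, isqrt(num) + 1):
        if num % i == 0:
            return False
    return True


# Illustrative inputs, much smaller than the list used in the article.
numbers = [1, 2, 15, 17, 21, 23]

with ThreadPoolExecutor(max_workers=4) as executor:
    # map() returns results in the same order as the inputs,
    # regardless of which worker finishes first.
    flags = list(executor.map(is_prime, numbers))

for number, flag in zip(numbers, flags):
    print(f"{number} is {'prime' if flag else 'not prime'}")
```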

To learn more about ThreadPoolExecutor, one can refer to Thread-based parallelism (Python 3.12.0 documentation).

Some more examples on threading can be found in David Beazley – Python Concurrency From the Ground Up: LIVE! – PyCon 2015.

The question remains: what are concurrent and futures anyway?

CONCURRENT AND FUTURES OF PARALLEL PROCESSING

Concurrent:

In simple terms, “concurrent” means doing multiple things at the same time. Imagine you have a checklist of tasks to complete, like cooking dinner. Instead of doing one thing at a time, like chopping vegetables first and then cooking noodles, you decide to do both tasks together. So, you’re chopping vegetables and cooking noodles at the same time. This is a concurrent way of working.

In computer programming, concurrency is like having multiple workers (or threads) in your computer, and each worker can do a different task simultaneously. For example, one worker can play music, another can download a file, and another can run a game, all at the same time.

Futures (in the context of programming):

“Futures” in programming are like promises or to-do lists for tasks that you want to complete in the future. Imagine you have a list of chores to do over the weekend, like mowing the lawn and cleaning the house. Instead of doing them right away, you write them down on a list and say, “I’ll do these tasks later.” These tasks on your list are like “futures.”

In computer programming, “futures” are used to represent tasks that will be completed in the future, possibly concurrently. You can start a task and get a “future” object that says, “I’ll give you the result when I’m done.” You can then continue with other tasks or work while waiting for the results.

For example, if you want to download a big file from the internet, you can start the download and get a “future” object. While the download is happening in the background, you can continue using your computer for other things. When the download is finished, you can check the “future” to get the downloaded data.
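In code, the “promise” looks roughly like this. The sketch assumes a made-up `slow_square` function whose `time.sleep` call stands in for a slow task such as a download; `submit`, `done`, `result` and `as_completed` are the standard concurrent.futures calls.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def slow_square(x: int, delay: float) -> int:
    """Pretend task: wait for `delay` seconds, then return x squared."""
    time.sleep(delay)
    return x * x


with ThreadPoolExecutor(max_workers=2) as executor:
    # submit() returns immediately with a Future; the work runs in the background.
    future = executor.submit(slow_square, 7, 0.1)
    print("finished already?", future.done())

    # result() blocks until the task is finished, then hands back its value.
    first_result = future.result()
    print("result:", first_result)  # result: 49

    # as_completed() yields futures as they finish, not in submission order.
    pending = {executor.submit(slow_square, n, d): n for n, d in [(2, 0.2), (3, 0.05)]}
    finished_order = [pending[f] for f in as_completed(pending)]
    print("finished in this order:", finished_order)
```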

To delve deeper into concurrent.futures, I would suggest reading Mastering Concurrency in Python: Create faster programs using concurrency, asynchronous, multithreading, and parallel programming by Quan Nguyen.

CONCLUSION

With this we finish our discussion on threads. Hopefully this helps you get started with threads, with a better sense of when to use them and what to keep in mind while using them.

REFERENCES

R1) Python Threading Tutorial: Run Code Concurrently Using the Threading Module

R2) David Beazley – Python Concurrency From the Ground Up: LIVE! – PyCon 2015

R4) Google

R5) Thread-based parallelism — Python 3.12.0 documentation
