Why Docker is getting obsolete


Overview

Docker made the world familiar with containers, a technology that completely disrupted the IT industry (imagine spinning up what looks like a complete operating system in seconds). But the technology that enabled containerisation was available long before Docker came into existence; what Docker did was make it very easy to work with containers.

In the process, Docker took on a large number of features that were genuinely needed at the time to make containers easy to work with. Now that modern containerisation tools and container orchestration platforms (such as Kubernetes and OpenShift) are in place, Docker provides far more than is needed to get things running. In this article we will briefly look at what containerisation is, how Docker came into the picture, and why it is becoming obsolete.

Containerisation and its need

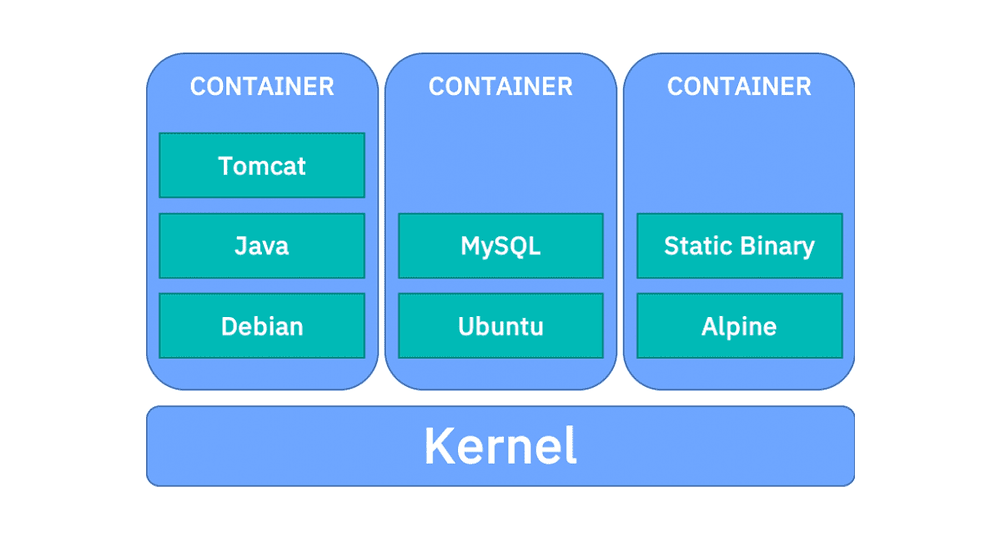

Containerisation is the packaging of software code together with all its necessary components, such as libraries, frameworks, and other dependencies, and the isolation of the resulting process from outside interference.

This may sound confusing, so let’s take an example to understand it.

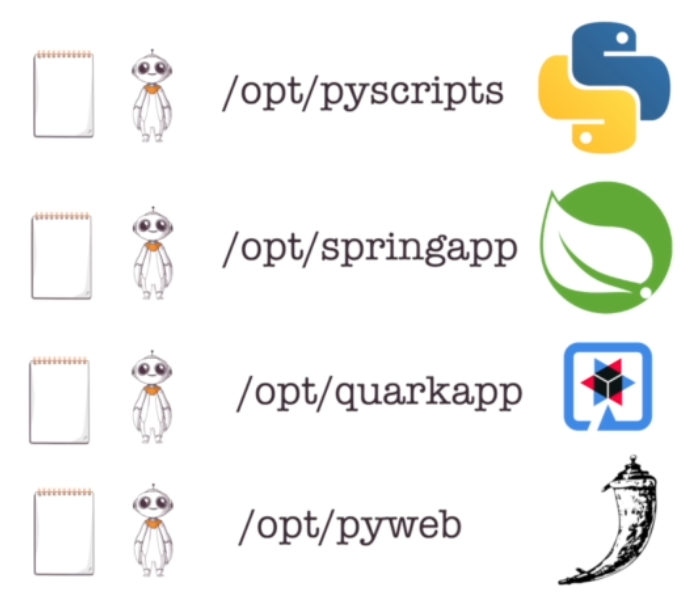

Let’s say you have a relatively big Linux server with 48 GB of RAM, an 8-core CPU, and a standard filesystem layout. Your company doesn’t really have an application that needs all of these resources at once. Instead, there are multiple applications that need to run on this single server.

Let’s say there is a Java web application, and there are some Python scripts to be executed periodically.

You don’t want to run these applications under the root user, because that would mean that each application can do anything it wants on the server, including accessing the files and directories of the other applications.

So, to isolate them from each other, you craft a beautiful directory layout and then run each application under a different Linux user. To actually run the applications, you create a new systemd service for each app, with cgroups making sure that system resources are managed properly.

It works pretty well for some time. The first problems appear during the next round of patching: one of the Python applications relies on a now-outdated system package.

You can’t update this package because the application will break. And you can’t leave this package as it is, because it puts the whole server, with all of the applications running there, at risk.

You tried to isolate each application as much as possible with the help of SELinux, cgroups, and a multi-user setup, but the final frontier, the filesystem, remains shared between all applications.

If you start looking closer, you will notice a couple of other things that remain shared. For example, each application shares the same process table. Your Python application is well aware of the existence of the Java application running on the same server.
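To make the shared process table concrete, here is a minimal sketch in Python. It assumes a Linux host where every process shows up as a numeric directory under /proc; the function names `visible_pids` and `command_name` are made up for this illustration.

```python
from __future__ import annotations

from pathlib import Path


def visible_pids(proc_root: str = "/proc") -> list[int]:
    """Return the PIDs visible under proc_root, sorted ascending."""
    root = Path(proc_root)
    # Every process appears as a directory whose name is its PID.
    return sorted(
        int(entry.name)
        for entry in root.iterdir()
        if entry.is_dir() and entry.name.isdigit()
    )


def command_name(pid: int, proc_root: str = "/proc") -> str:
    """Return the short command name stored in <proc_root>/<pid>/comm."""
    return (Path(proc_root) / str(pid) / "comm").read_text().strip()
```

Calling `visible_pids()` from the Python application's user account on the server described above would typically list the Java process as well, because nothing separates the two process tables. Inside a container with its own PID namespace, the same call would normally return only the container's own processes.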

Your quest to properly isolate applications from each other becomes harder the deeper you dive. On top of that, this is all manual setup, which means you have to repeat these heavy steps again and again. Wouldn’t it be great if there were a tool that did this for you every time you needed to completely isolate a process with all of its dependencies?

This is where containerisation comes in. A container is just a Linux process that is isolated from the rest of the system with the help of the tools already mentioned and a few extra ones. cgroups, SELinux or AppArmor, standard Unix permissions, Linux namespaces, and Linux capabilities all work together to isolate this process in such a way that, from inside the process, your application is not aware that it lives in a container.

You probably don’t want to set up Linux namespaces, cgroups, and everything else from scratch for every new container you want to create. A container tool does it for you. A container, then, is nothing but a useful abstraction to describe a process that is isolated this thoroughly from every other process.
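One way to see namespaces at work is to compare the namespace identifiers of two processes. The sketch below assumes a Linux host, where each entry in /proc/<pid>/ns is a symbolic link whose target looks like `pid:[4026531836]`. The helper names are invented for this example, and reading another user's process may require extra privileges.

```python
from __future__ import annotations

import os
import re

# Link targets look like "pid:[4026531836]" or "net:[4026531992]".
_NS_LINK = re.compile(r"^(?P<kind>[a-z_]+):\[(?P<inode>\d+)\]$")


def parse_ns_link(target: str) -> tuple[str, int]:
    """Split a namespace link target into (kind, identifier)."""
    match = _NS_LINK.match(target)
    if match is None:
        raise ValueError(f"not a namespace link: {target!r}")
    return match["kind"], int(match["inode"])


def namespaces_of(pid: int | str = "self", proc_root: str = "/proc") -> dict[str, int]:
    """Map each entry in <proc_root>/<pid>/ns to its namespace identifier."""
    ns_dir = os.path.join(proc_root, str(pid), "ns")
    result = {}
    for name in sorted(os.listdir(ns_dir)):
        _, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, name)))
        result[name] = inode
    return result


def shared_namespaces(a: dict[str, int], b: dict[str, int]) -> list[str]:
    """Return the namespace entries on which two processes agree."""
    return sorted(name for name in a.keys() & b.keys() if a[name] == b[name])
```

Two ordinary processes on the same host would usually agree on every entry, while a containerised process would typically differ from a host process on entries such as `pid`, `mnt`, and `net`.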

Benefits of Containerisation

Containerisation offers a lot of benefits to developers and development teams. Some of them are:

Portability: A container creates an executable package of software that is abstracted away from (not tied to or dependent upon) the host operating system’s userland, and hence is portable and able to run uniformly and consistently on any platform that offers a compatible container runtime.

Agility: The container ecosystem has shifted to engines that follow the standards maintained by the Open Container Initiative (OCI). Software developers can continue using agile or DevOps tools and processes for rapid application development and enhancement.

Efficiency: Software running in containerised environments shares the machine’s OS kernel, and image layers can be shared across containers. Thus, containers are typically smaller than VMs and require less start-up time.

Security: The isolation of applications as containers makes it harder for malicious code in one container to affect other containers or the host system. Additionally, security permissions can be defined to automatically block unwanted components from entering containers or to limit communication with unnecessary resources.
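The efficiency point about shared layers can be illustrated with a small sketch. It models layers as raw bytes identified by a SHA-256 digest, which is a simplification of how real image stores work; the function names and the two example images are assumptions made for this illustration.

```python
from __future__ import annotations

import hashlib


def layer_digest(content: bytes) -> str:
    """Identify a layer by the SHA-256 digest of its content."""
    return "sha256:" + hashlib.sha256(content).hexdigest()


def storage_needed(images: dict[str, list[bytes]]) -> tuple[int, int]:
    """Return (bytes if every image keeps its own copies, bytes with shared layers)."""
    naive = sum(len(layer) for layers in images.values() for layer in layers)
    unique: dict[str, int] = {}
    for layers in images.values():
        for layer in layers:
            # Identical layers hash to the same digest and are stored once.
            unique[layer_digest(layer)] = len(layer)
    return naive, sum(unique.values())


# Two hypothetical images built on the same base layer.
base = b"b" * 1000
images = {
    "java-web-app": [base, b"j" * 200],
    "python-scripts": [base, b"p" * 50],
}
print(storage_needed(images))  # (2250, 1250)
```

The base layer is counted once instead of twice, which is the effect the efficiency claim describes.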

What is Docker

Now that we have a grasp of containers, we can finally understand what Docker is all about. Docker came into existence in 2013 and is considered a pioneer in the containerisation world, but does that mean that containerising a process was not possible before Docker?

There was no lack of ways to isolate system resources before the initial Docker release in 2013. LXC appeared back in 2008, the chroot command appeared decades ago, Solaris Zones were released in 2005, and FreeBSD jails have existed since 2000.

But, just like virtualisation, containerisation was lacking in standardisation. There was no open standard, just lots of different approaches and technologies around the general concept of “resource isolation without full virtualisation”.

This is where Docker came in. Docker is a container management tool: a complete package for managing everything related to containers (in fact, the component that actually runs the container is only one of Docker’s many components). Docker focused on ease of use and the developer experience. It became very convenient to spin up containers, build container images and, shortly after that, deploy them, even to production.

In fact, it was so enjoyable to use Docker that the adoption of containers exploded. As a result of this explosion, the line between what containers are and what Docker is became very blurred.

Containerised application deployment and everything wrong with Docker

There seem to be two main problems around containerised application deployment in the industry:

- Software companies are now deploying thousands of container instances daily, and that’s a complexity of scale they have to manage.

- In a production environment, you need to manage the containers that run the applications and ensure that there is no downtime. For example, if a container goes down, another container needs to be started.

To solve these problems we need a container orchestration tool, which is built on top of container engines. A container orchestration platform automates the deployment, scaling, and management of containerised workloads and services.

Container orchestration platforms can ease management tasks such as scaling containerised apps, rolling out new versions of apps, and providing monitoring, logging and debugging, among other functions.
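The "if a container goes down, another container needs to be started" behaviour boils down to comparing a desired state with the actual state and correcting the difference. Here is a minimal, in-memory sketch of that idea. The `Cluster` class and its naming scheme are invented for this example and do not reflect how any particular orchestrator is implemented.

```python
from __future__ import annotations

import itertools
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy model: a desired replica count and the set of running containers."""

    desired_replicas: int
    running: set[str] = field(default_factory=set)
    _ids: itertools.count = field(default_factory=lambda: itertools.count(1))

    def reconcile(self) -> list[str]:
        """Start or stop containers until actual state matches desired state."""
        actions = []
        while len(self.running) < self.desired_replicas:
            name = f"app-{next(self._ids)}"
            self.running.add(name)
            actions.append(f"start {name}")
        while len(self.running) > self.desired_replicas:
            name = sorted(self.running)[-1]
            self.running.remove(name)
            actions.append(f"stop {name}")
        return actions


cluster = Cluster(desired_replicas=3)
print(cluster.reconcile())      # ['start app-1', 'start app-2', 'start app-3']
cluster.running.discard("app-2")  # simulate a crashed container
print(cluster.reconcile())      # ['start app-4']
```

A real orchestrator runs a loop like this continuously and across many machines, but the principle of declaring a target and letting the platform converge on it is the same.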

Kubernetes (originally open-sourced by Google and based on its internal project called Borg) has established itself as the de facto standard for container orchestration. Kubernetes works with many container engines, such as Docker (support for which was deprecated in the 1.20 release), but it also works with any container system that conforms to the Open Container Initiative (OCI) standards for container image formats and runtimes.

Docker also ships with a similar product called Docker Swarm, but compared with Kubernetes its capabilities fall short of what many industry use cases require, and it is limited to using Docker as its container engine.

Just like Docker before it, Kubernetes made it so easy to run, manage, and automate containerised application deployment that everyone started using it to deploy their apps instead of working directly with bare-bones container engines such as Docker or CRI-O.

Now let’s have a look at some major drawbacks of using Docker today as a container runtime.

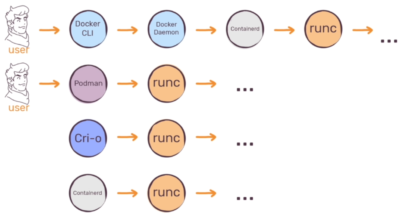

No longer supported by Kubernetes: This is a major drawback, as a large share of container workloads are deployed on Kubernetes today. Kubernetes has supported using Docker as a container runtime up to this point, so why is it choosing to stop? Kubernetes works with all container runtimes that implement a standard known as the Container Runtime Interface (CRI). This is essentially a standard way of communicating between Kubernetes and the container runtime, and any runtime that supports this standard automatically works with Kubernetes. Docker does not implement the CRI. In the past, there weren’t as many good options for container runtimes, so Kubernetes implemented dockershim, an additional layer that serves as an interface between Kubernetes and Docker. Now, however, there are mature runtimes available that implement the CRI (such as containerd and CRI-O), and it no longer makes sense for Kubernetes to maintain special support for Docker.
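The shim situation is a classic adapter pattern, and a short sketch may help. Everything below is hypothetical: the real CRI is a gRPC API with many more operations, and none of these class or method names come from Kubernetes or Docker.

```python
from __future__ import annotations

from typing import Protocol


class ContainerRuntime(Protocol):
    """The single interface the orchestrator is willing to talk to."""

    def run_container(self, image: str) -> str: ...


class NativeRuntime:
    """Hypothetical runtime that implements the interface directly."""

    def __init__(self) -> None:
        self.started: list[str] = []

    def run_container(self, image: str) -> str:
        self.started.append(image)
        return f"native-{len(self.started)}"


class LegacyEngine:
    """Hypothetical engine with its own, different API."""

    def __init__(self) -> None:
        self.containers: list[str] = []

    def create_and_start(self, image_name: str) -> dict[str, str]:
        self.containers.append(image_name)
        return {"Id": f"legacy-{len(self.containers)}"}


class LegacyShim:
    """Adapter the orchestrator must maintain to keep the legacy engine usable."""

    def __init__(self, engine: LegacyEngine) -> None:
        self._engine = engine

    def run_container(self, image: str) -> str:
        # Translate the standard call into the engine's own API.
        return self._engine.create_and_start(image_name=image)["Id"]


def schedule(runtime: ContainerRuntime, image: str) -> str:
    """The orchestrator only ever sees the standard interface."""
    return runtime.run_container(image)
```

`schedule(NativeRuntime(), "web:1.0")` works with no extra code, while the legacy engine only works through `LegacyShim`. Every change on either side of the shim means maintenance work for the orchestrator project, which is the cost described above.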

Has a complex architecture: Docker was released in 2013, when there were no available standards for working with containers. Docker had to provide everything itself to make containers easy to work with, and so it chose an architecture that looked good at the time. Now, next to modern containerisation tools such as Podman and CRI-O, Docker seems complex to use, as running a daemon comes with its own complications.

Does not fully support rootless containers: Rootless containers reduce the risks of holding privileges while still allowing unprivileged users to run containers. Inside a rootless container, the code appears to be running as root and can therefore perform privileged tasks inside the container that would otherwise not be permitted. But the host sees the container as a regular user and does not grant it any privileges. If an attacker does manage to compromise the container, they will not be able to carry any root privileges over to the host machine. Docker does not yet support rootless containers as fully as other tools such as Podman do. Although stable support arrived in version 20.10, it requires a lot of manual configuration and is not foolproof.
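The "root inside, regular user outside" trick rests on user namespace ID mappings. On Linux, /proc/<pid>/uid_map lists ranges as three numbers: the first ID inside the namespace, the first ID outside it, and the length of the range. The sketch below translates container UIDs to host UIDs; the function names and the example mapping (host user 1000 with a subordinate range starting at 100000) are assumptions for illustration.

```python
from __future__ import annotations


def parse_uid_map(text: str) -> list[tuple[int, int, int]]:
    """Parse uid_map content into (inside_start, outside_start, length) ranges."""
    ranges = []
    for line in text.splitlines():
        if line.strip():
            inside, outside, length = (int(part) for part in line.split())
            ranges.append((inside, outside, length))
    return ranges


def host_uid(container_uid: int, ranges: list[tuple[int, int, int]]) -> int | None:
    """Translate a UID seen inside the container to the UID the host sees."""
    for inside, outside, length in ranges:
        if inside <= container_uid < inside + length:
            return outside + (container_uid - inside)
    return None  # unmapped: this UID has no identity on the host


# Hypothetical mapping for a rootless container started by host user 1000.
example = parse_uid_map("0 1000 1\n1 100000 65536\n")
print(host_uid(0, example))   # 1000
print(host_uid(33, example))  # 100032
```

With this mapping, root in the container (UID 0) is just user 1000 on the host, so a process that escapes the container has only that user's permissions.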

Docker has performance problems in non-native environments: Containerisation is built on Linux kernel features. Although Docker supports running Linux containers on Windows and macOS, it still requires an actual Linux kernel to perform its operations. What Docker for Mac provides is an abstracted VM containing that kernel, which you never interact with directly. You interact with the containers within that VM, but they are networked together with your host in such a way that you rarely need any information about the VM itself. That extra virtualisation layer adds overhead compared with running containers natively on Linux.

Provides too much when it comes to container orchestration platforms: This is probably one more reason why Kubernetes stopped supporting Docker. Storage, networking, configuration, orchestration, and so on are all handled by Kubernetes. But since Docker predates the container orchestration tools, it provides all of these itself, in forms that are not as mature as those in Kubernetes and that are not required. Tools like Kubernetes only need a runtime that can spawn a container from an image, and that’s it. Everything else is managed by Kubernetes itself, so Docker is something of bloatware for orchestration tools when lighter alternatives such as CRI-O are available.

None of these drawbacks were prominent when Docker was launched, because back then there was little else around containers. But as time went on, more sophisticated tools came onto the market, which made choosing Docker over them questionable.

Conclusion

Finally, I would like to point out that this is not the end for Docker, and I am not trying to suggest that Docker is bad in any way. Docker is a great tool and it has dramatically improved the developer experience. If there is a single tool that has skyrocketed container usage in the world, it’s Docker. Most containerised application stacks still use Docker to this day.

There is nothing wrong with Docker. It was and still is a great tool, and the company behind it keeps improving the product from both the usability and security points of view. It’s just that it doesn’t seem to fit modern workloads as well as other tools on the market.

I would strongly recommend that everyone reading this blog try going Dockerless (using great alternatives such as Podman and CRI-O) and try to look at containers without looking at them through the Docker lens. Cheers!
