Natural Language Querying for Relational Databases

Organizations store a growing volume of mission-critical data in complex relational databases: customer transaction histories, supply chain logistics, sales performance metrics, and operational telemetry. The hard part is getting value out of that data. Access typically requires expertise in SQL (Structured Query Language) and database permissions that are tightly controlled. This technical barrier creates a bottleneck: business insights are delayed, and the organization comes to rely on a small number of skilled specialists such as data analysts and database administrators.

The Challenge: Unlocking Insights from Complex Relational Data

Our client, a large organization, faced exactly this challenge. Their numeric data (sales, customer demographics, operational metrics) was cumbersome to access and interpret:

- SQL Expertise Barrier: Non-technical business users had strategic questions but lacked the SQL knowledge to answer them. Marketing, finance, and operations teams couldn’t pull reports independently.

- Access Permissions Bureaucracy: Gaining database access involved lengthy approval processes, delaying urgent insights.

- Over-reliance on Data Teams: Business units relied heavily on IT or data analytics teams for even simple reports, diverting valuable technical resources from strategic projects.

- Time-Consuming Insights Cycle: The question-to-answer cycle took hours or days, hindering agile decision-making in dynamic markets.

- Complexity of Multi-Table Joins: Valuable insights often required joining data across many tables, a challenging task for non-experts.

“The core problem was a pervasive lack of democratized, intuitive, and timely access to data-driven insights across the organization.”

The Solution:

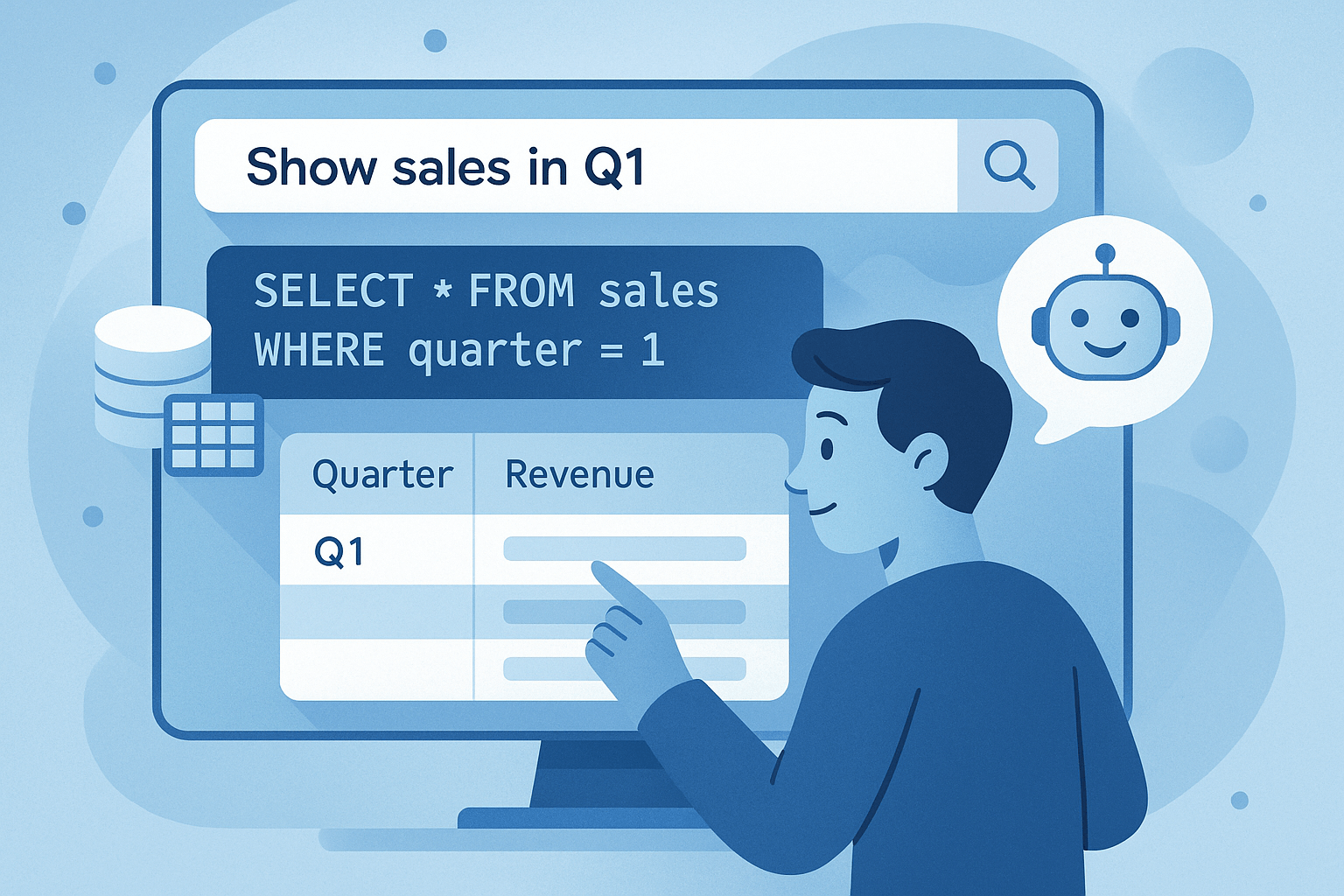

An LLM-Powered Natural Language Querying Engine

To address these pain points, we built a querying engine powered by Large Language Models (LLMs). It acts as a secure intermediary between business users and their relational databases. Users extract insights by asking questions in natural language, with no need for SQL expertise or direct database access.

Here’s how the solution works:

- Natural Language Input: Users type questions in plain English via an intuitive web interface, like “What were the total sales of product X in Q3 last year by region?”

- Intelligent SQL Generation: An advanced LLM interprets the natural language query, leveraging database schema knowledge to accurately translate it into complex, optimized SQL, handling joins, aggregations, filtering, and ordering. It manages ambiguities and synonyms for precise output.

- Secure Database Connection & Execution: The generated SQL is validated and then executed against the database through an account with pre-configured, limited permissions. Users get authorized data access without handling credentials, and the query cannot modify data. All interactions are logged.

- Efficient Data Retrieval: The robust database connector swiftly fetches raw tabular results, optimized for performance and near-instantaneous feedback.

- Contextual Textual Response (Optional): For complex numeric results, the LLM turns the retrieved data into a clear, concise textual summary. For example, it can condense detailed sales figures into a single statement: “The total sales for product X in Q3 last year were $5.2 million, with the North region contributing 40%.”

This end-to-end solution provides a seamless, intuitive, and inherently secure way for non-technical users to query and understand their organization’s data.
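The flow above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the client implementation: an in-memory SQLite database stands in for the production database, `stub_llm` is a hypothetical placeholder that returns a canned query where a real model call would go, and `summarize` uses a fixed template where the engine would optionally ask the LLM for a summary. The `sales` table, its columns, and all helper names are invented for the example.

```python
import sqlite3
from typing import Callable


def describe_schema(conn: sqlite3.Connection) -> str:
    """Return one 'table(col type, ...)' line per user table."""
    tables = conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
    ).fetchall()
    lines = []
    for (table,) in tables:
        # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk).
        cols = conn.execute(f"PRAGMA table_info({table})").fetchall()
        lines.append(f"{table}({', '.join(f'{c[1]} {c[2]}' for c in cols)})")
    return "\n".join(lines)


def build_prompt(question: str, schema: str) -> str:
    """Inject the schema into the prompt as context for SQL generation."""
    return (
        "Translate the question into one read-only SQL query.\n"
        f"Schema:\n{schema}\n"
        f"Question: {question}\nSQL:"
    )


def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call; always returns the same query."""
    return (
        "SELECT region, SUM(amount) AS total FROM sales "
        "WHERE product = 'X' GROUP BY region ORDER BY total DESC"
    )


def answer(question: str, conn: sqlite3.Connection, llm: Callable[[str], str]):
    """Question -> prompt with schema -> SQL -> tabular rows."""
    sql = llm(build_prompt(question, describe_schema(conn)))
    return sql, conn.execute(sql).fetchall()


def summarize(rows) -> str:
    """Template-based summary; rows are (region, total) sorted by total."""
    if not rows:
        return "No matching rows."
    total = sum(amount for _, amount in rows)
    top_region, top_amount = rows[0]
    share = round(100 * top_amount / total)
    return f"Total sales were {total}, with the {top_region} region contributing {share}%."


# Toy data, invented for the example.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (product TEXT, region TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("X", "North", 40), ("X", "South", 35), ("X", "East", 25), ("Y", "North", 99)],
)
sql, rows = answer("What were the total sales of product X by region?", conn, stub_llm)
summary = summarize(rows)
print(summary)
```

Passing the model in as a plain callable keeps the orchestration independent of any particular LLM provider, so the stub can be swapped for a real client without touching the rest of the flow.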

Technical Components and How We Built It

Architecture: User Interface -> API Gateway -> LLM Service (SQL Generator, Response Formatter) -> Database Schema Resolver -> Database Connector -> Relational Database.

Building this sophisticated engine required a meticulously layered approach combining state-of-the-art LLM capabilities with robust, secure, and performant database interaction:

- Large Language Model (LLM): The intellectual core, a powerful foundational LLM (e.g., GPT-3.5/4 or Llama 2/3).
  - Purpose: Understanding natural language, generating accurate SQL, and optionally converting raw data to human-readable text.
  - Considerations: Selected for robust few-shot learning and reasoning; fine-tuned on client-specific data or synthetic datasets to enhance accuracy for unique schemas and business vernacular.

- Schema Understanding Module: Bridges natural language and database structure.
  - Purpose: Provides the LLM with comprehensive, up-to-date database schema knowledge (table/column names, data types, relationships). Dynamically fetches schema changes.
  - Implementation: Developed a module to regularly query and cache database metadata, dynamically injecting it into the LLM’s prompt as context.

- SQL Generation & Validation Logic: Ensures LLM output accuracy and security.
  - Purpose: Checks LLM-generated SQL for syntax errors, logical consistency, and adherence to security policies (e.g., read-only access).
  - Implementation: Utilized SQL parsing/validation libraries (e.g., sqlparse) and a custom rule engine. Extensive automated testing ensured query accuracy for various inputs.

- Database Connector: Securely connects to various relational databases.
  - Purpose: Establishes secure connections and executes validated SQL queries against systems like PostgreSQL, MySQL, SQL Server, Oracle.
  - Implementation: Leveraged robust standard database drivers (e.g., psycopg2, mysql-connector-python) within a secure backend service, with connection pooling for efficiency.

- Backend Application Framework: Orchestrates the entire process.
  - Purpose: Manages user prompts, LLM interaction, schema module, SQL execution, and response delivery.
  - Deployment: Built with a scalable Python framework (FastAPI or Flask), containerized with Docker, and deployed on secure internal servers or private cloud.

- User Interface (UI): The intuitive web-based interface for users.
  - Purpose: Provides an easy input for questions and clear visualization of results.
  - Implementation: Developed using modern JavaScript frameworks like React, focusing on UX, feedback, and accessibility, designed for integration into existing enterprise portals.

- Security & Access Control: Multi-layered approach to protect sensitive data.
  - Purpose: Ensures data privacy, prevents unauthorized access, and mitigates malicious queries.
  - Implementation: Database connector operates with least-privilege (read-only) permissions. All interactions and generated queries are logged for audit. Robust API keys, RBAC, and authentication secure communication channels. Data is encrypted in transit and at rest.
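The validation and least-privilege layers described in this list can be illustrated with a small sketch. It is a simplified stand-in, not the engine's actual rule set: a regular-expression guard takes the place of a parser-based check (the real system is described as using libraries such as sqlparse plus a custom rule engine), and SQLite's `PRAGMA query_only` plays the role of a read-only database account. The names `validate_sql`, `run_read_only`, and `FORBIDDEN` are hypothetical. The guard is deliberately conservative and would also reject a harmless query whose string literal contains a semicolon or a forbidden keyword.

```python
import re
import sqlite3

# Keywords a read-only query should never contain (illustrative list).
FORBIDDEN = re.compile(
    r"\b(insert|update|delete|drop|alter|create|truncate|grant|revoke|attach|pragma)\b",
    re.IGNORECASE,
)


def validate_sql(sql: str) -> str:
    """Return the cleaned statement, or raise ValueError if a rule is violated."""
    statement = sql.strip().rstrip(";").strip()
    if not statement:
        raise ValueError("empty query")
    if ";" in statement:
        raise ValueError("multiple statements are not allowed")
    if not re.match(r"(?i)(select|with)\b", statement):
        raise ValueError("only SELECT queries are allowed")
    if FORBIDDEN.search(statement):
        raise ValueError("query contains a forbidden keyword")
    return statement


def run_read_only(conn: sqlite3.Connection, sql: str, audit_log: list) -> list:
    """Log the generated query, validate it, then execute it."""
    audit_log.append(sql)  # every generated query is logged, even rejected ones
    return conn.execute(validate_sql(sql)).fetchall()


# Toy database, invented for the example.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount INTEGER)")
conn.execute("INSERT INTO sales VALUES ('North', 40)")
conn.commit()

# Second layer: the connection itself refuses writes from here on.
conn.execute("PRAGMA query_only = ON")

audit_log = []
rows = run_read_only(conn, "SELECT region, amount FROM sales;", audit_log)
print(rows)
```

The two layers are independent on purpose: if a malformed query slips past the textual guard, the read-only connection still refuses to modify data.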

The Impact: Democratized Data Access and Accelerated Insights

The LLM-powered natural language querying engine profoundly benefited the client:

- Truly Democratized Data Access: Business users, regardless of technical background, can now directly query complex databases, fostering data literacy and self-service.

- Substantially Reduced Dependency on Skilled Workforce: SQL experts are freed from routine data retrieval, redirecting their expertise to strategic analytical projects and innovation.

- Dramatically Faster Decision-Making: Insights are generated in minutes rather than hours or days, enabling agile, data-driven business decisions and rapid reaction to market changes.

- Significant Increase in Productivity: Departments independently explore data, leading to a noticeable uplift in productivity and efficiency.

- Enhanced Data Security Posture: Abstracting direct database access and centralizing query execution through the secure engine improved overall data security.

- Tangible Cost Efficiency: Less time required from highly paid SQL experts, combined with faster operational insights, led to measurable cost savings.

The solution transformed data interaction, making complex insights accessible, intuitive, and actionable for everyone, accelerating business innovation.
